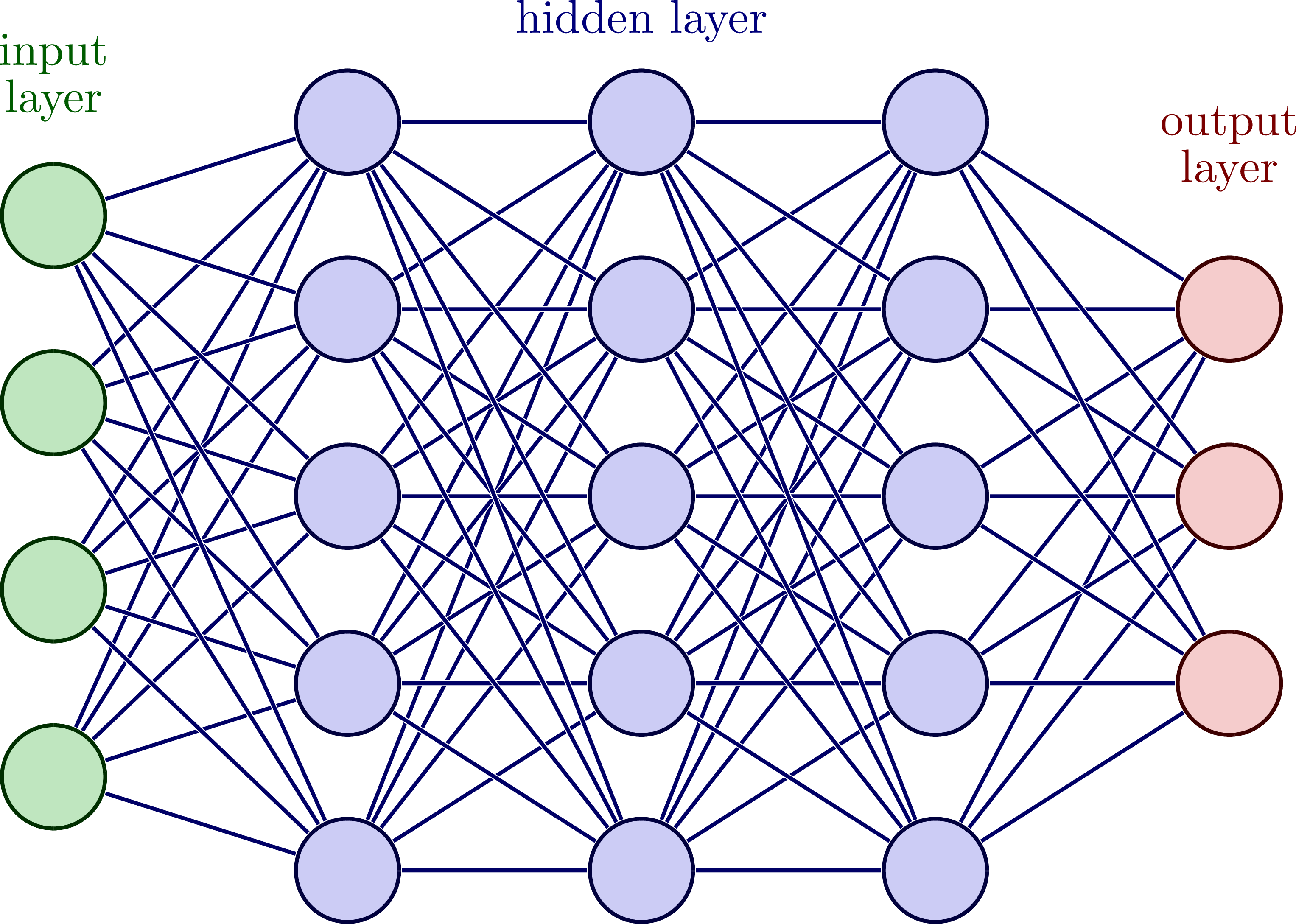

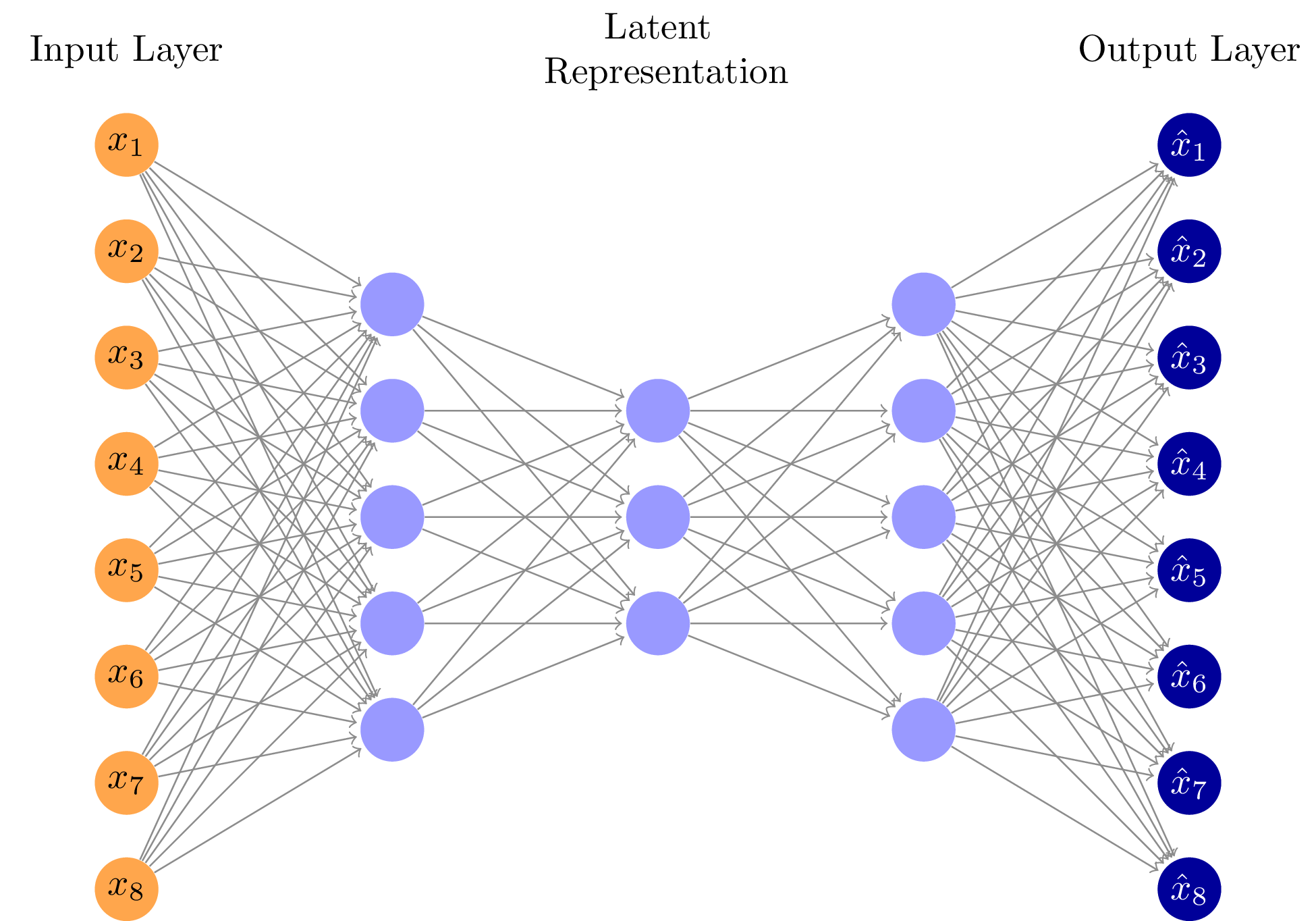

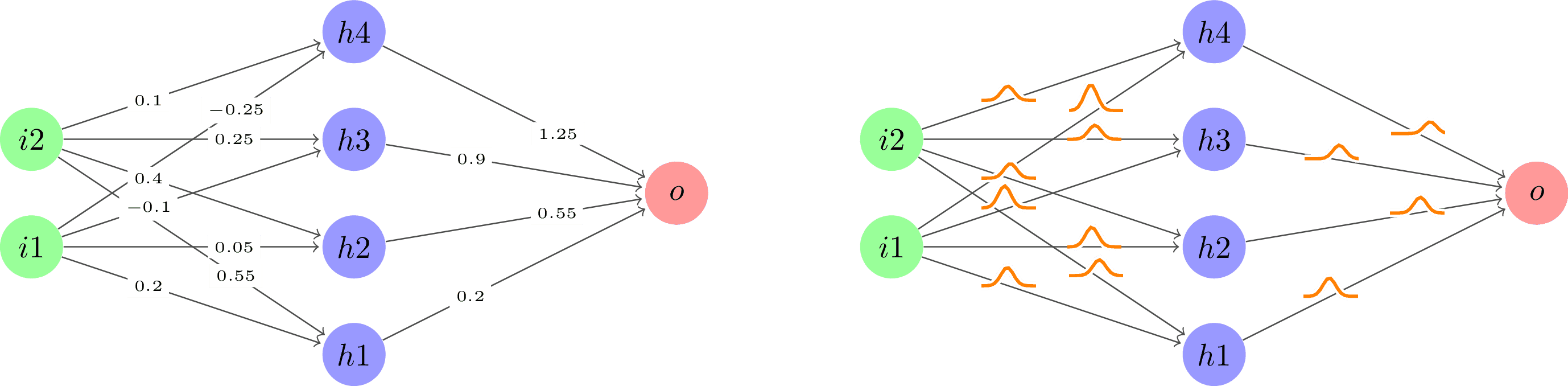

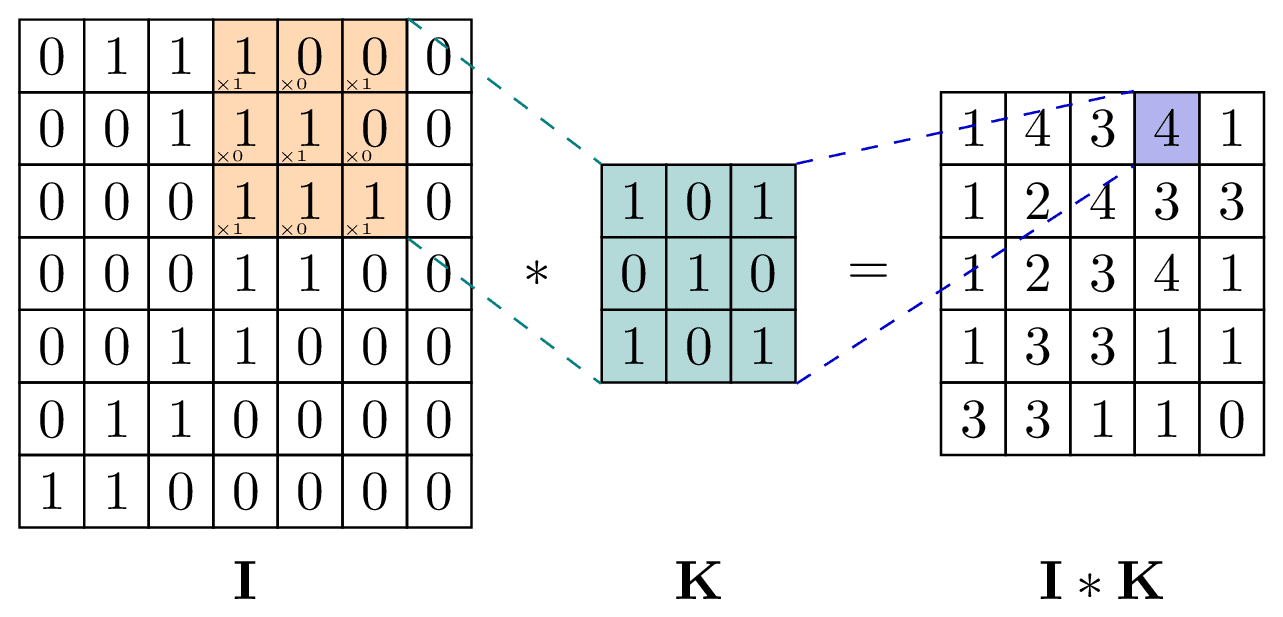

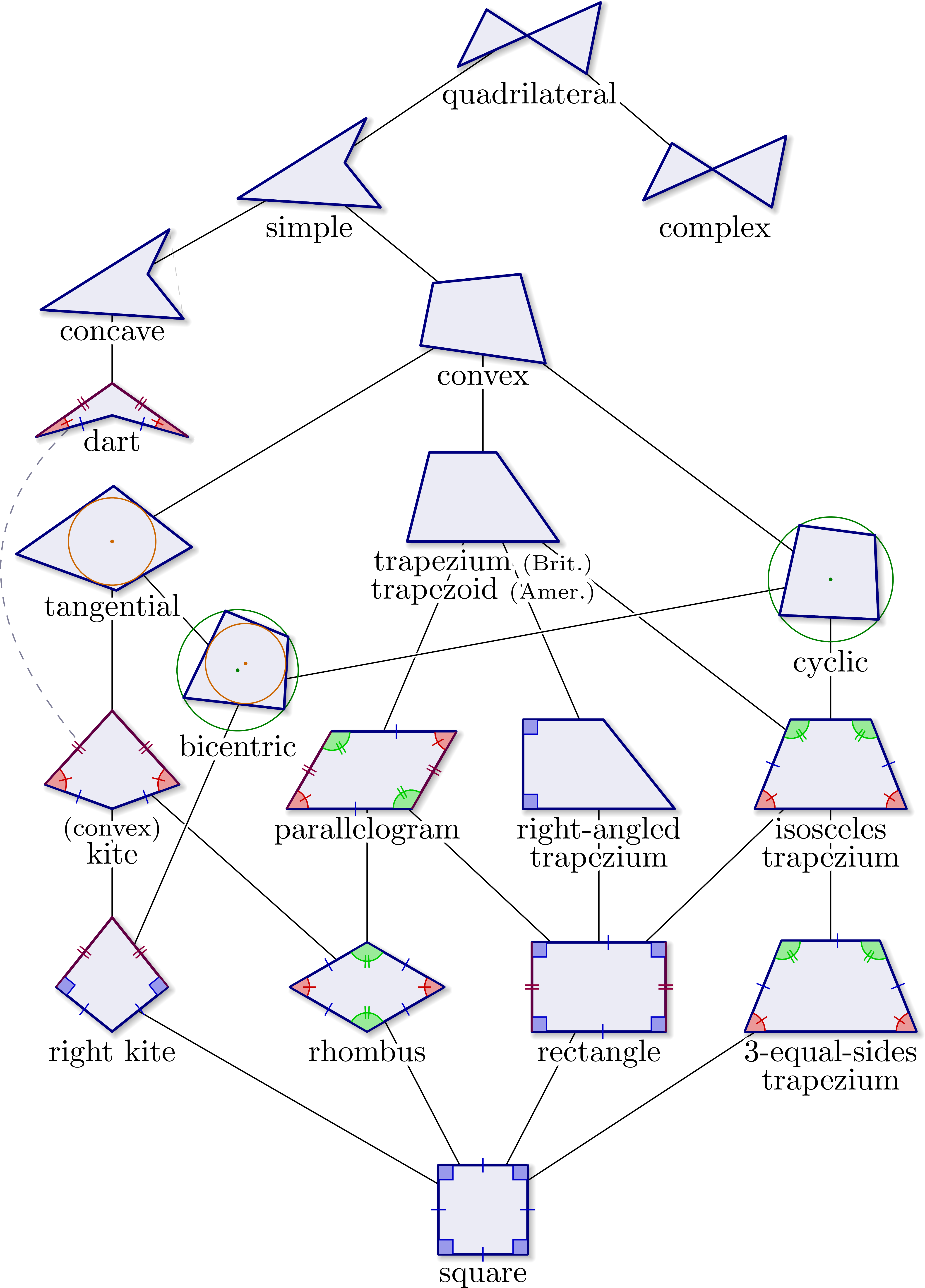

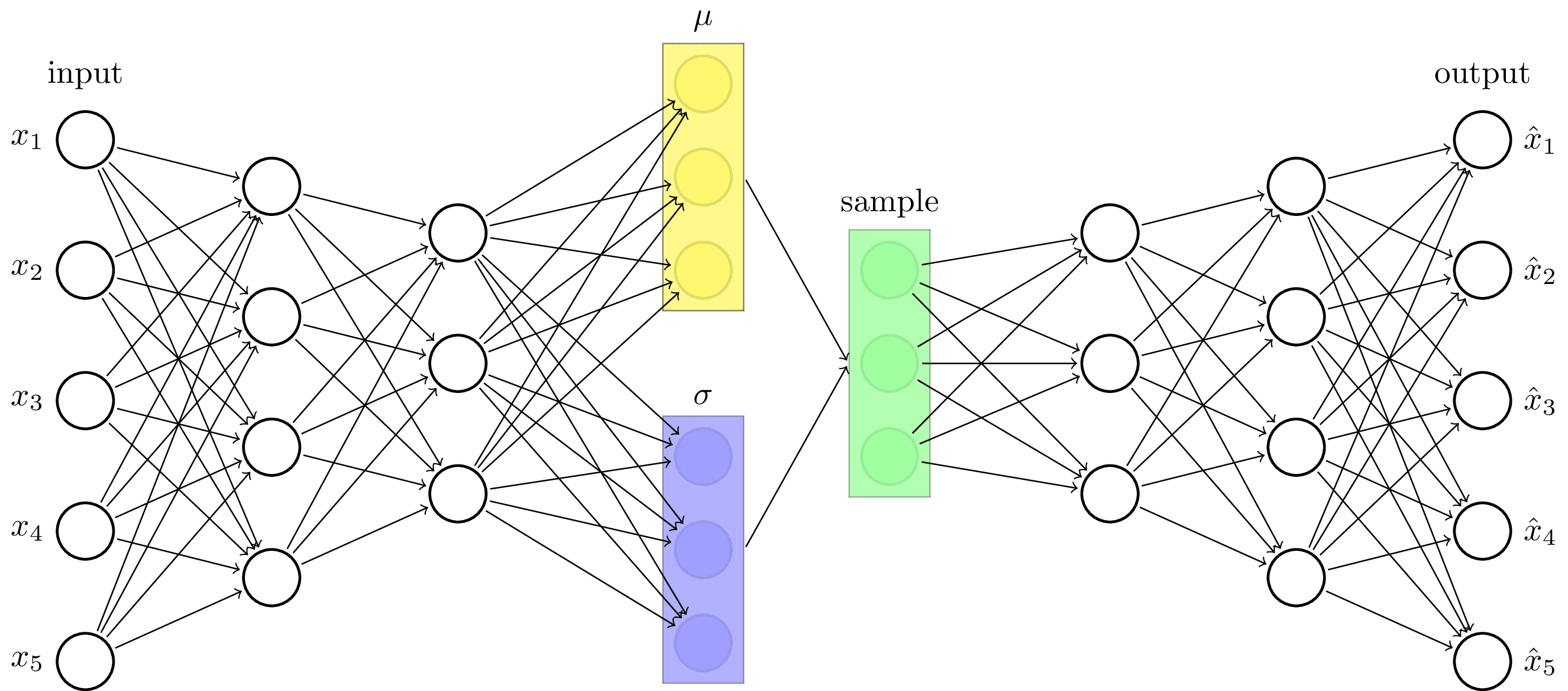

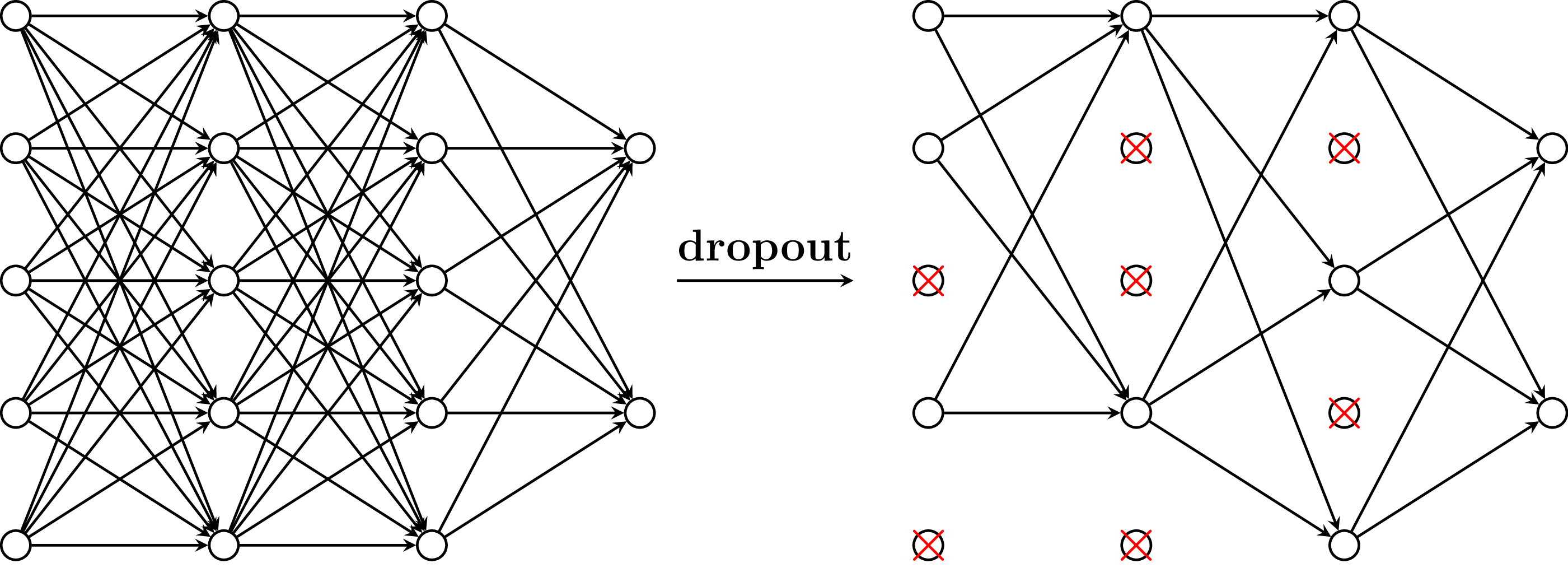

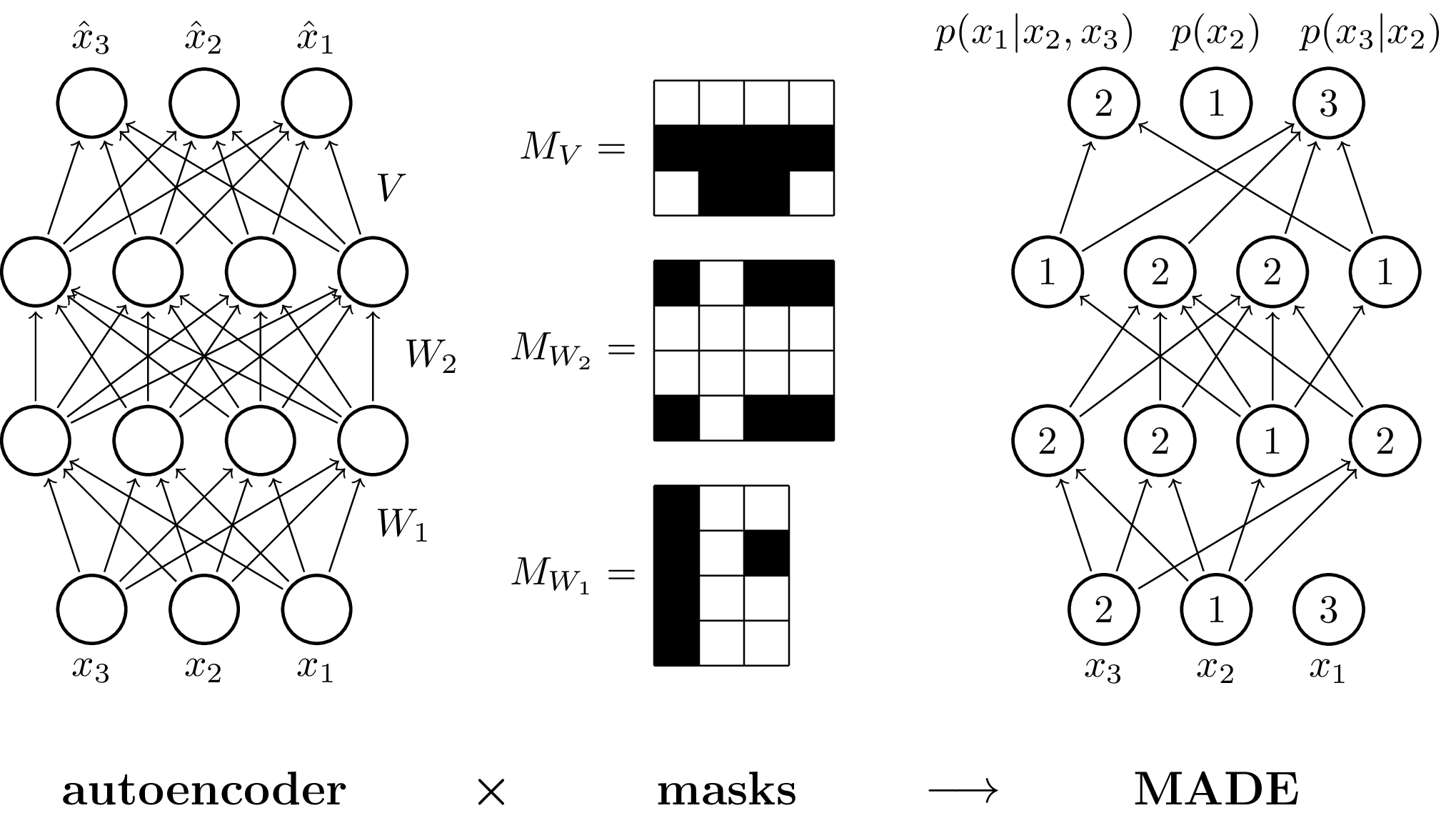

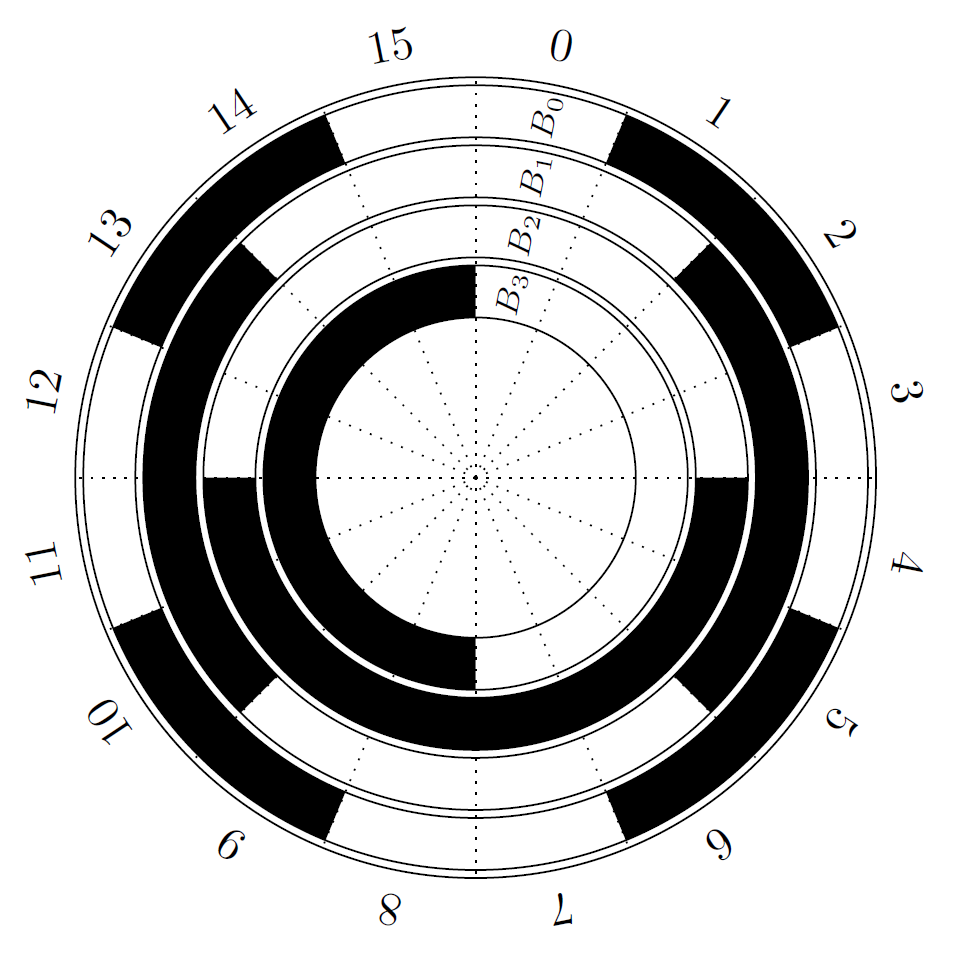

Some examples of neural network architectures: deep neural networks (DNNs), a deep convolutional neural network (CNN), an autoencoder (encoder+decoder), and an illustration of the activation function in a neuron.

Basic idea

The full LaTeX code at the bottom of this post uses the listofitems package, which lets one pre-define an array with the number of nodes in each layer. Such an array is easier and more compact to loop over:

\documentclass[border=3pt,tikz]{standalone}

\usepackage{tikz}

\usepackage{listofitems} % for \readlist to create arrays

\tikzstyle{mynode}=[thick,draw=blue,fill=blue!20,circle,minimum size=22]

\begin{document}

\begin{tikzpicture}[x=2.2cm,y=1.4cm]

\readlist\Nnod{4,5,5,5,3} % number of nodes per layer

% \Nnodlen = length of \Nnod (i.e. total number of layers)

% \Nnod[1] = element (number of nodes) at index 1

\foreachitem \N \in \Nnod{ % loop over layers

% \N = current element in this iteration (i.e. number of nodes for this layer)

% \Ncnt = index of current layer in this iteration

\foreach \i [evaluate={\x=\Ncnt; \y=\N/2-\i+0.5; \prev=int(\Ncnt-1);}] in {1,...,\N}{ % loop over nodes

\node[mynode] (N\Ncnt-\i) at (\x,\y) {};

\ifnum\Ncnt>1 % connect to previous layer

\foreach \j in {1,...,\Nnod[\prev]}{ % loop over nodes in previous layer

\draw[thick] (N\prev-\j) -- (N\Ncnt-\i); % connect arrows directly

}

\fi % else: nothing to connect first layer

}

}

\end{tikzpicture}

\end{document}
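As a small worked check of the vertical placement used in the snippet above, the following sketch shows why the offset `+0.5` in `\y=\N/2-\i+0.5` centers each layer around y = 0. The symbols y_i and N are just shorthand for `\y`, `\i` and `\N` in the code, introduced here for illustration.

```latex
% Vertical position of node i in a layer with N nodes (in units of the y vector):
\[
  y_i = \frac{N}{2} - i + \frac{1}{2}, \qquad i = 1,\dots,N .
\]
% The first and last node are mirror images, and the mean position vanishes:
\[
  y_1 = \frac{N-1}{2}, \qquad
  y_N = -\frac{N-1}{2}, \qquad
  \frac{1}{N}\sum_{i=1}^{N} y_i
    = \frac{N}{2} - \frac{N+1}{2} + \frac{1}{2} = 0 .
\]
% Example: N = 4 gives y = 1.5, 0.5, -0.5, -1.5.
```

Several other snippets in this post use `\y=\N/2-\i` without the offset. That shifts every layer down by half a unit, which leaves the relative layout of the nodes unchanged.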

An elegant alternative method is to use the remember option of the \foreach routine, as described in Chapter 88 of the TikZ manual.

\documentclass[border=3pt,tikz]{standalone}

\usepackage{tikz}

\tikzstyle{mynode}=[thick,draw=blue,fill=blue!20,circle,minimum size=22]

\begin{document}

\begin{tikzpicture}[x=2.2cm,y=1.4cm]

\foreach \N [count=\lay,remember={\N as \Nprev (initially 0);}]

in {4,5,5,5,3}{ % loop over layers

\foreach \i [evaluate={\y=\N/2-\i; \x=\lay; \prev=int(\lay-1);}]

in {1,...,\N}{ % loop over nodes

\node[mynode] (N\lay-\i) at (\x,\y) {};

\ifnum\Nprev>0 % connect to previous layer

\foreach \j in {1,...,\Nprev}{ % loop over nodes in previous layer

\draw[thick] (N\prev-\j) -- (N\lay-\i);

}

\fi

}

}

\end{tikzpicture}

\end{document}
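For readers new to the remember option, here is a minimal, self-contained sketch that isolates it from the network drawing. It uses the plain form given in the TikZ manual, `remember=\x as \lastx (initially 0)`; the macro names `\x` and `\lastx` are arbitrary choices for this illustration.

```latex
\documentclass[border=3pt,tikz]{standalone}
\begin{document}
\begin{tikzpicture}
  % In each iteration, \lastx holds the value \x had in the previous
  % iteration; in the first iteration it holds the initial value 0.
  \foreach \x [remember=\x as \lastx (initially 0)] in {1,...,4}{
    \draw[->,thick] (\lastx,0) -- (\x,0) node[above] {\x};
  }
\end{tikzpicture}
\end{document}
```

In the network code above, the same mechanism passes the number of nodes in the previous layer (`\Nprev`) to the current iteration, so no array lookup is needed.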

This can be generalized into a \pic macro that can be reused more than once in the same tikzpicture with unique names for the nodes:

\documentclass[border=3pt,tikz]{standalone}

\usepackage{xcolor}

% LAYERS

\pgfdeclarelayer{back} % to draw on background

\pgfsetlayers{back,main} % set order

% COLORS

\colorlet{mylightred}{red!95!black!30}

\colorlet{mylightblue}{blue!95!black!30}

\colorlet{mylightgreen}{green!95!black!30}

% STYLES

\tikzset{ % node styles, numbered for easy mapping with \nstyle

>=latex, % for default LaTeX arrow head

node/.style={thick,circle,draw=#1!50!black,fill=#1,

minimum size=\pgfkeysvalueof{/tikz/node size},inner sep=0.5,outer sep=0},

node/.default=mylightblue, % default color for node style

connect/.style={thick,blue!20!black!35}, %,line cap=round

}

% MACROS

\def\lastlay{1} % index of last layer

\def\lastN{1} % number of nodes in last layer

\tikzset{

pics/network/.style={%

code={%

\foreach \N [count=\lay,remember={\N as \Nprev (initially 0);}]

in {#1}{ % loop over layers

\xdef\lastlay{\lay} % store for after loop

\xdef\lastN{\N} % store for after loop

\foreach \i [evaluate={%

\y=\pgfkeysvalueof{/tikz/node dist}*(\N/2-\i+0.5);

\x=\pgfkeysvalueof{/tikz/layer dist}*(\lay-1);

\prev=int(\lay-1);

}%

] in {1,...,\N}{ % loop over nodes

\node[node=\pgfkeysvalueof{/tikz/node color}] (-\lay-\i) at (\x,\y) {};

\ifnum\Nprev>0 % connect to previous layer

\foreach \j in {1,...,\Nprev}{ % loop over nodes in previous layer

\begin{pgfonlayer}{back} % draw on back

\draw[connect] (-\prev-\j) -- (-\lay-\i);

\end{pgfonlayer}

}

\fi

} % close \foreach node \i in layer

} % close \foreach layer \N

\coordinate (-west) at (-\pgfkeysvalueof{/tikz/node size}/2,0); % name first layer

\foreach \i in {1,...,\lastN}{ % name nodes in last layer

\node[node,draw=none,fill=none] (-last-\i) at (-\lastlay-\i) {};

}

} % close code

}, % close pics

layer dist/.initial=1.5, % horizontal distance between layers

node dist/.initial=1.0, % vertical distance between nodes in same layers

node color/.initial=mylightblue, % default color of nodes

node size/.initial=15pt, % size of nodes

}

\begin{document}

\begin{tikzpicture}[x=1cm,y=1cm,node dist=1.0]

\pic[node color=mylightblue] % top

(T) at (0,2) {network={2,4,4,3,3,4,2,1}};

\pic[node color=mylightred] % bottom

(B) at (0,-2) {network={2,4,4,3,3,4,4,1}};

\node[node=mylightgreen] (OUT) at (13,0) {};

\begin{pgfonlayer}{back} % draw on back

\draw[connect] (T-last-1) -- (OUT) -- (B-last-1);

\end{pgfonlayer}

\end{tikzpicture}

\end{document}
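The unique node names come from the name prefix that TikZ applies inside a pic: a node named `(-a)` inside a pic that was placed with name `(T)` can be referenced from outside as `(T-a)`. The sketch below strips this down to a hypothetical two-node pic called `pair` (not part of the original code) to show the naming mechanism on its own.

```latex
\documentclass[border=3pt,tikz]{standalone}
\tikzset{
  % Hypothetical minimal pic: two connected nodes named with a leading "-"
  pics/pair/.style={code={
    \node[circle,draw] (-a) at (0,0) {};
    \node[circle,draw] (-b) at (1,0) {};
    \draw (-a) -- (-b); % inside the pic, the prefix is added automatically
  }},
}
\begin{document}
\begin{tikzpicture}
  \pic (T) at (0, 1) {pair}; % nodes become T-a and T-b
  \pic (B) at (0,-1) {pair}; % nodes become B-a and B-b
  \draw[red] (T-b) -- (B-b); % connect nodes of two different pics
\end{tikzpicture}
\end{document}
```

This is why the network pic above names its nodes `(-\lay-\i)` and `(-last-\i)`: the same code can be instantiated several times without name clashes.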

Examples

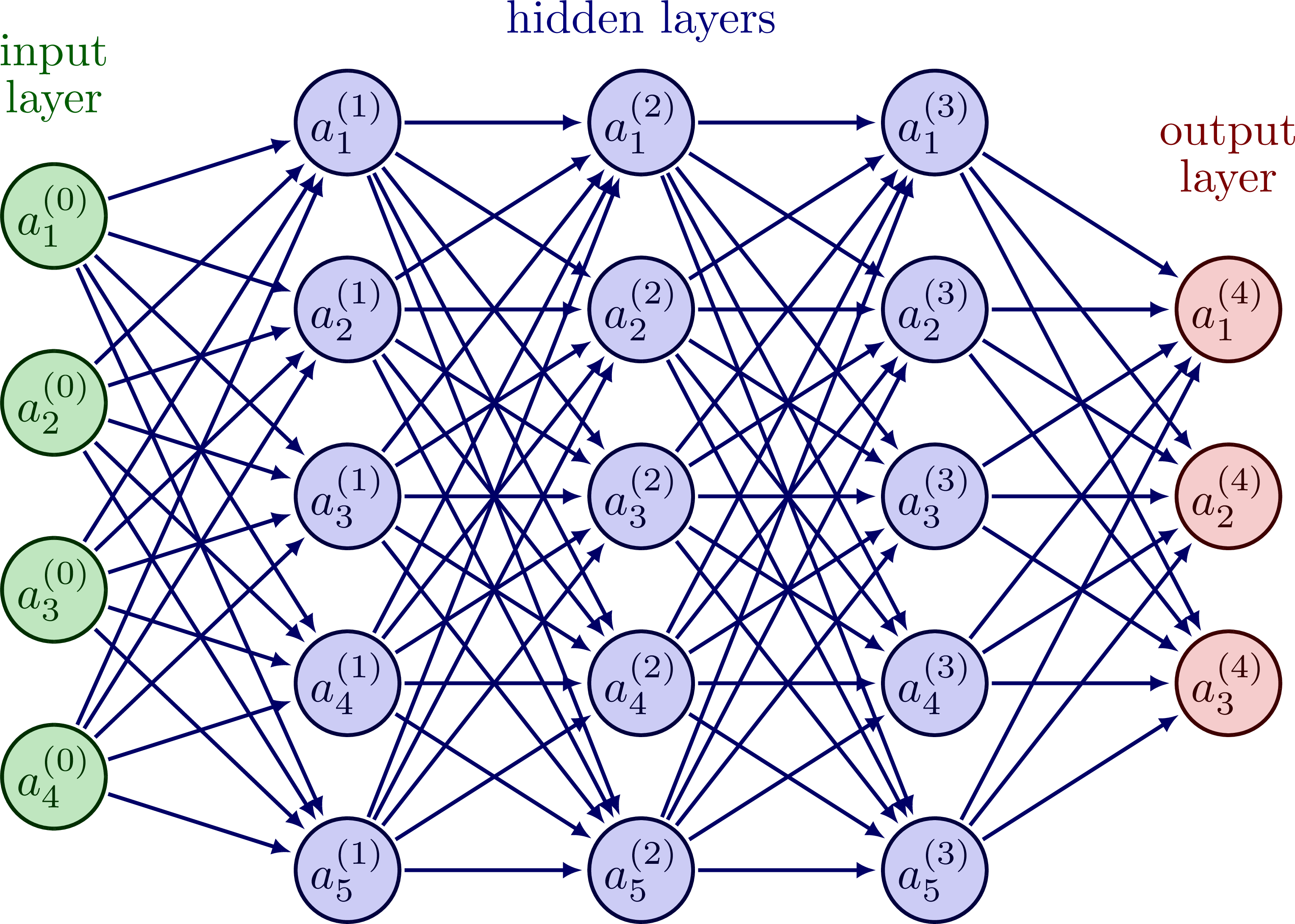

Connecting the nodes with arrows:

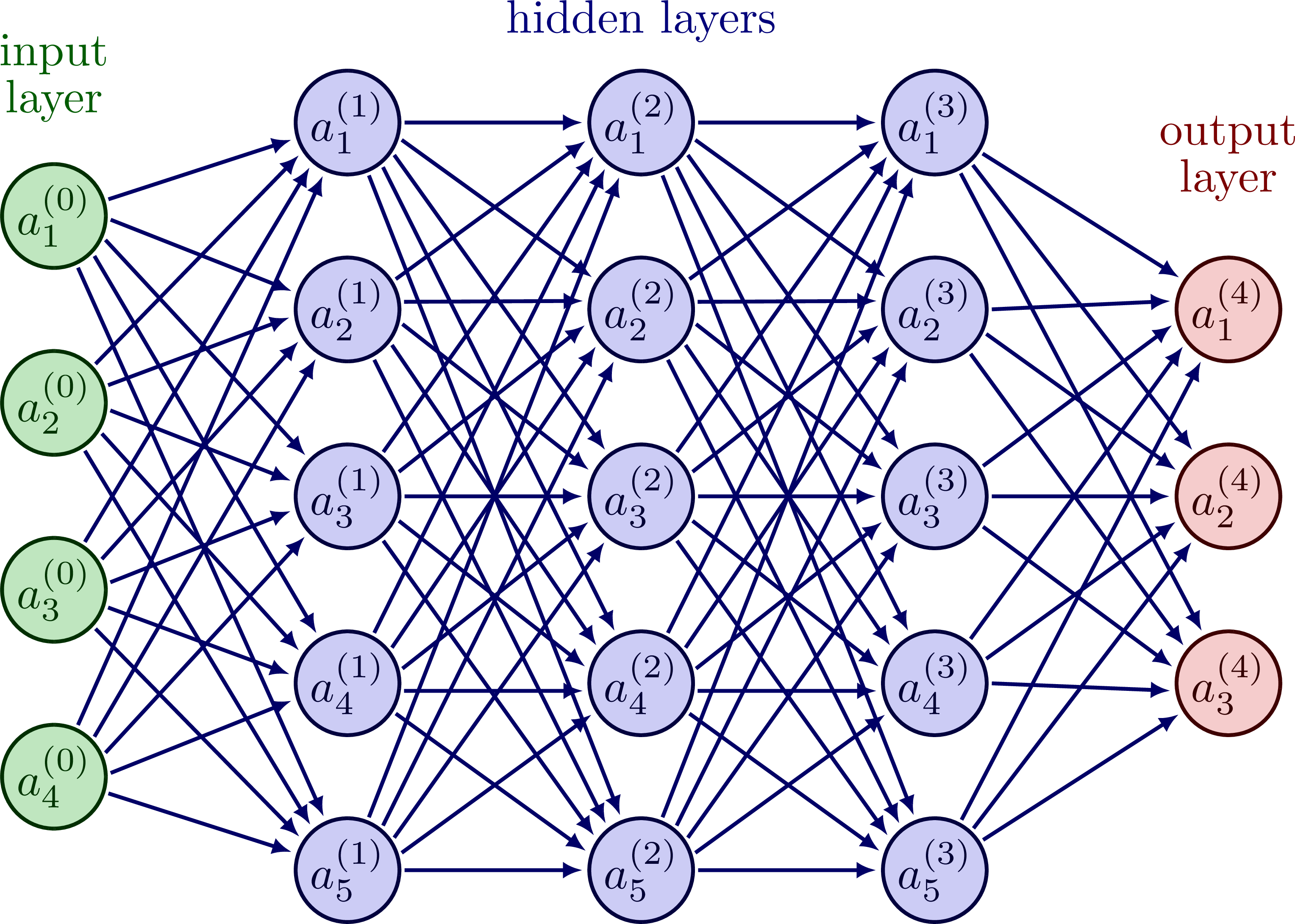

Distributing the arrows uniformly around the nodes:

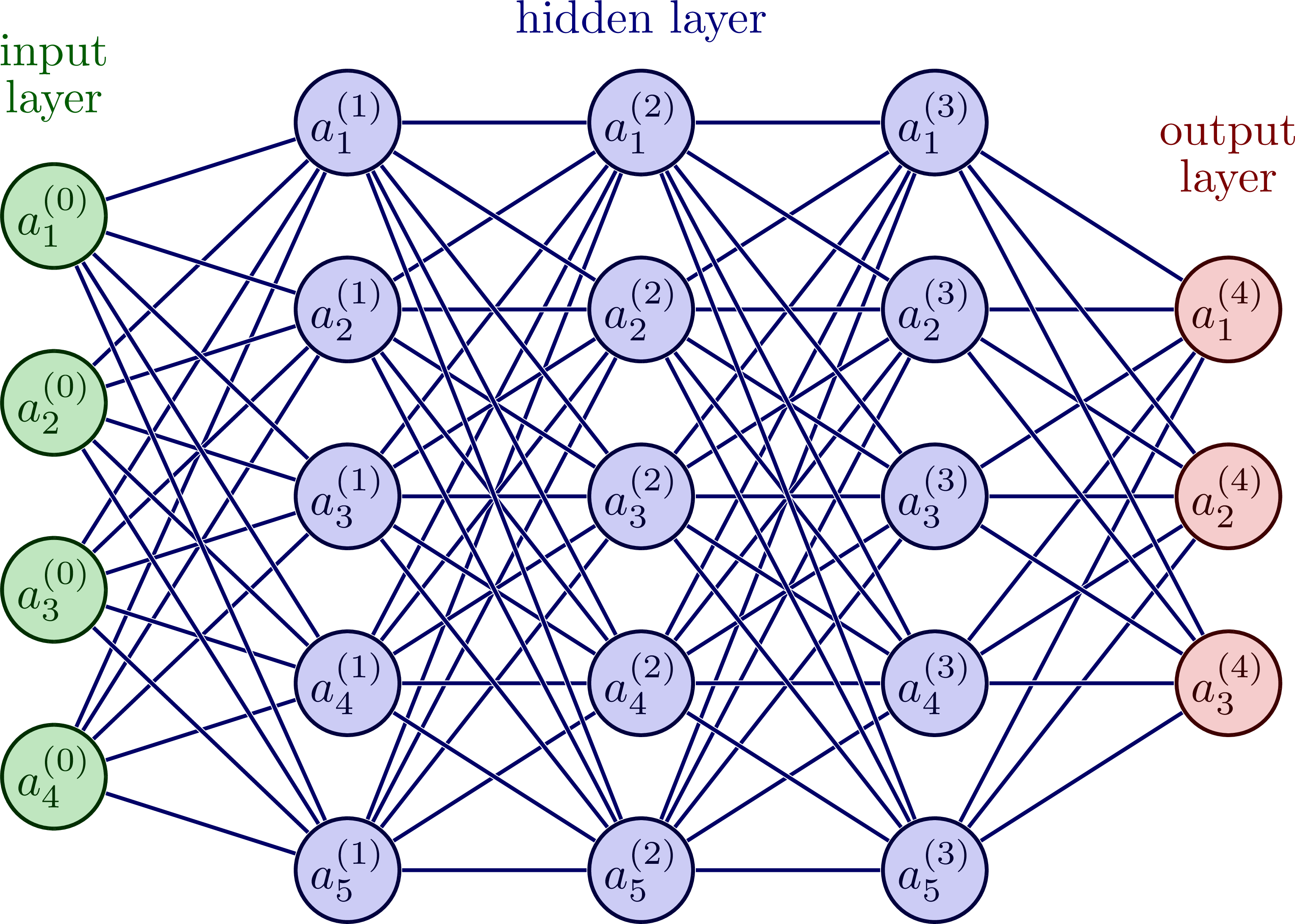
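The uniform distribution is a linear interpolation between two extreme angles, as computed by the `\setAngles` macro and the `\ang` expression in the full code. Written out as a formula, with the symbols θ and N_prev introduced here only as shorthand for `\ang`, `\angmin`, `\angmax`, `\dang` and `\Nnod[\prev]` (the block assumes amsmath for `cases` and `\text`):

```latex
% Angle (in degrees) at which the arrow from node j of the previous layer
% arrives at the current node. theta_min and theta_max are the directions
% from the current node to the first and last node of the previous layer.
\[
  \theta_j = \theta_{\min} + \Delta\theta\,\frac{j-1}{N_{\mathrm{prev}}-1},
  \qquad j = 1,\dots,N_{\mathrm{prev}},
\]
\[
  \Delta\theta =
  \begin{cases}
    \theta_{\max}-\theta_{\min}     & \text{if } \theta_{\max}-\theta_{\min} \ge 0,\\
    \theta_{\max}-\theta_{\min}+360 & \text{otherwise.}
  \end{cases}
\]
```

Note that the denominator vanishes for a previous layer with a single node, so the expression as written assumes at least two nodes per layer.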

Connecting the nodes with just lines:

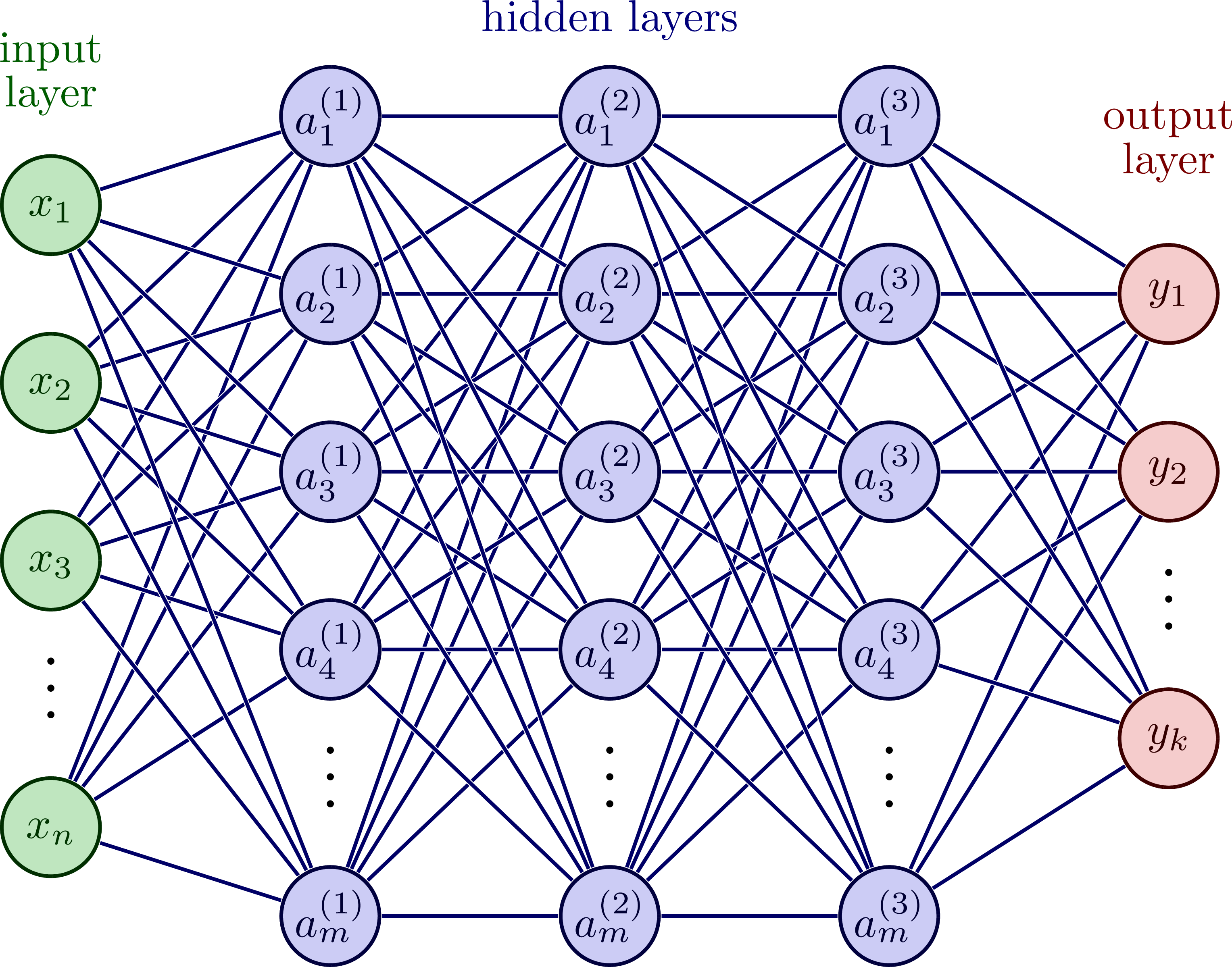

Inserting ellipses between the last two rows:

A very dense deep neural network:

A deep convolutional neural network (CNN):

An autoencoder (encoder+decoder):

Activation function in one neuron and one layer in matrix notation:
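For reference, the equations typeset in this figure are reproduced below from the full code, followed by a tiny numerical check. The numbers and the choice of a sigmoid for σ are assumptions made only for this illustration; the figure itself does not fix a particular activation function.

```latex
% Activation of the first neuron in layer 1, and the whole layer in matrix form:
\[
  a_1^{(1)} = \sigma\!\left( \sum_{i=1}^{n} w_{1,i}\, a_i^{(0)} + b_1^{(0)} \right),
  \qquad
  \mathbf{a}^{(1)} = \sigma\!\left( \mathbf{W}^{(0)} \mathbf{a}^{(0)} + \mathbf{b}^{(0)} \right).
\]
% Illustrative numbers (assumed): n = 2, sigmoid \sigma(z) = 1/(1+e^{-z}),
% w_{1,1} = 1, w_{1,2} = -1, a_1^{(0)} = a_2^{(0)} = 0.5, b_1^{(0)} = 0.
\[
  a_1^{(1)} = \sigma\bigl( 1\cdot 0.5 - 1\cdot 0.5 + 0 \bigr)
            = \sigma(0) = \frac{1}{1+e^{0}} = 0.5 .
\]
```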

Full code

Edit and compile if you like:

% Author: Izaak Neutelings (September 2021)

% Inspiration:

% https://www.asimovinstitute.org/neural-network-zoo/

% https://www.youtube.com/watch?v=aircAruvnKk&list=PLZHQObOWTQDNU6R1_67000Dx_ZCJB-3pi&index=1

\documentclass[border=3pt,tikz]{standalone}

\usepackage{amsmath} % for aligned

\usepackage{listofitems} % for \readlist to create arrays

\usetikzlibrary{arrows.meta} % for arrow size

\usepackage[outline]{contour} % glow around text

\contourlength{1.4pt}

% COLORS

\usepackage{xcolor}

\colorlet{myred}{red!80!black}

\colorlet{myblue}{blue!80!black}

\colorlet{mygreen}{green!60!black}

\colorlet{myorange}{orange!70!red!60!black}

\colorlet{mydarkred}{red!30!black}

\colorlet{mydarkblue}{blue!40!black}

\colorlet{mydarkgreen}{green!30!black}

% STYLES

\tikzset{

>=latex, % for default LaTeX arrow head

node/.style={thick,circle,draw=myblue,minimum size=22,inner sep=0.5,outer sep=0.6},

node in/.style={node,green!20!black,draw=mygreen!30!black,fill=mygreen!25},

node hidden/.style={node,blue!20!black,draw=myblue!30!black,fill=myblue!20},

node convol/.style={node,orange!20!black,draw=myorange!30!black,fill=myorange!20},

node out/.style={node,red!20!black,draw=myred!30!black,fill=myred!20},

connect/.style={thick,mydarkblue}, %,line cap=round

connect arrow/.style={-{Latex[length=4,width=3.5]},thick,mydarkblue,shorten <=0.5,shorten >=1},

node 1/.style={node in}, % node styles, numbered for easy mapping with \nstyle

node 2/.style={node hidden},

node 3/.style={node out}

}

\def\nstyle{int(\lay<\Nnodlen?min(2,\lay):3)} % map layer number onto 1, 2, or 3

\begin{document}

% NEURAL NETWORK with coefficients, arrows

\begin{tikzpicture}[x=2.2cm,y=1.4cm]

\message{^^JNeural network with arrows}

\readlist\Nnod{4,5,5,5,3} % array of number of nodes per layer

\message{^^J Layer}

\foreachitem \N \in \Nnod{ % loop over layers

\edef\lay{\Ncnt} % alias of index of current layer

\message{\lay,}

\pgfmathsetmacro\prev{int(\Ncnt-1)} % number of previous layer

\foreach \i [evaluate={\y=\N/2-\i; \x=\lay; \n=\nstyle;}] in {1,...,\N}{ % loop over nodes

% NODES

\node[node \n] (N\lay-\i) at (\x,\y) {$a_\i^{(\prev)}$};

%\node[circle,inner sep=2] (N\lay-\i') at (\x-0.15,\y) {}; % shifted node

%\draw[node] (N\lay-\i) circle (\R);

% CONNECTIONS

\ifnum\lay>1 % connect to previous layer

\foreach \j in {1,...,\Nnod[\prev]}{ % loop over nodes in previous layer

\draw[connect arrow] (N\prev-\j) -- (N\lay-\i); % connect arrows directly

%\draw[connect arrow] (N\prev-\j) -- (N\lay-\i'); % connect arrows to shifted node

}

\fi % else: nothing to connect first layer

}

}

% LABELS

\node[above=5,align=center,mygreen!60!black] at (N1-1.90) {input\\[-0.2em]layer};

\node[above=2,align=center,myblue!60!black] at (N3-1.90) {hidden layers};

\node[above=8,align=center,myred!60!black] at (N\Nnodlen-1.90) {output\\[-0.2em]layer};

\end{tikzpicture}

%% NEURAL NETWORK using \foreach's remember instead of \readlist

%\begin{tikzpicture}[x=2.2cm,y=1.4cm]

% \message{^^JNeural network with arrows}

% \def\Ntot{5} % total number of indices

% \def\nstyle{int(\lay<\Ntot?min(2,\lay):3)} % map layer number onto 1, 2, or 3

%

% \message{^^J Layer}

% \foreach \N [count=\lay,remember={\N as \Nprev (initially 0);}]

% in {4,5,5,5,3}{ % loop over layers

% \message{\lay,}

% \foreach \i [evaluate={\y=\N/2-\i; \x=\lay; \n=\nstyle; \prev=int(\lay-1);}]

% in {1,...,\N}{ % loop over nodes

% \node[node \n] (N\lay-\i) at (\x,\y) {$a_\i^{(\prev)}$};

%

% % CONNECTIONS

% \ifnum\Nprev>0 % connect to previous layer

% \foreach \j in {1,...,\Nprev}{ % loop over nodes in previous layer

% \draw[connect arrow] (N\prev-\j) -- (N\lay-\i); % connect arrows directly

% }

% \fi

%

% }

% }

%

% % LABELS

% \node[above=5,align=center,mygreen!60!black] at (N1-1.90) {input\\[-0.2em]layer};

% \node[above=2,align=center,myblue!60!black] at (N3-1.90) {hidden layers};

% \node[above=8,align=center,myred!60!black] at (N\Ntot-1.90) {output\\[-0.2em]layer};

%

%\end{tikzpicture}

% NEURAL NETWORK with coefficients, uniform arrows

\newcommand\setAngles[3]{

\pgfmathanglebetweenpoints{\pgfpointanchor{#2}{center}}{\pgfpointanchor{#1}{center}}

\pgfmathsetmacro\angmin{\pgfmathresult}

\pgfmathanglebetweenpoints{\pgfpointanchor{#2}{center}}{\pgfpointanchor{#3}{center}}

\pgfmathsetmacro\angmax{\pgfmathresult}

\pgfmathsetmacro\dang{\angmax-\angmin}

\pgfmathsetmacro\dang{\dang<0?\dang+360:\dang}

}

\begin{tikzpicture}[x=2.2cm,y=1.4cm]

\message{^^JNeural network with uniform arrows}

\readlist\Nnod{4,5,5,5,3} % array of number of nodes per layer

\foreachitem \N \in \Nnod{ % loop over layers

\def\lay{\Ncnt} % alias of index of current layer

\pgfmathsetmacro\prev{int(\Ncnt-1)} % number of previous layer

\message{^^J Layer \lay, N=\N, prev=\prev ->}

% NODES

\foreach \i [evaluate={\y=\N/2-\i; \x=\lay; \n=\nstyle;}] in {1,...,\N}{ % loop over nodes

\message{N\lay-\i, }

\node[node \n] (N\lay-\i) at (\x,\y) {$a_\i^{(\prev)}$};

}

% CONNECTIONS

\foreach \i in {1,...,\N}{ % loop over nodes

\ifnum\lay>1 % connect to previous layer

\setAngles{N\prev-1}{N\lay-\i}{N\prev-\Nnod[\prev]} % angles in current node

%\draw[red,thick] (N\lay-\i)++(\angmin:0.2) --++ (\angmin:-0.5) node[right,scale=0.5] {\dang};

%\draw[blue,thick] (N\lay-\i)++(\angmax:0.2) --++ (\angmax:-0.5) node[right,scale=0.5] {\angmin, \angmax};

\foreach \j [evaluate={\ang=\angmin+\dang*(\j-1)/(\Nnod[\prev]-1);}] %-180+(\angmax-\angmin)*\j/\Nnod[\prev]

in {1,...,\Nnod[\prev]}{ % loop over nodes in previous layer

\setAngles{N\lay-1}{N\prev-\j}{N\lay-\N} % angles out from previous node

\pgfmathsetmacro\angout{\angmin+(\dang-360)*(\i-1)/(\N-1)} % angle out from previous node

%\draw[connect arrow,white,line width=1.1] (N\prev-\j.{\angout}) -- (N\lay-\i.{\ang});

\draw[connect arrow] (N\prev-\j.{\angout}) -- (N\lay-\i.{\ang}); % connect arrows uniformly

}

\fi % else: nothing to connect first layer

}

}

% LABELS

\node[above=5,align=center,mygreen!60!black] at (N1-1.90) {input\\[-0.2em]layer};

\node[above=2,align=center,myblue!60!black] at (N3-1.90) {hidden layers};

\node[above=8,align=center,myred!60!black] at (N\Nnodlen-1.90) {output\\[-0.2em]layer};

\end{tikzpicture}

% NEURAL NETWORK with coefficients, no arrows

\begin{tikzpicture}[x=2.2cm,y=1.4cm]

\message{^^JNeural network without arrows}

\readlist\Nnod{4,5,5,5,3} % array of number of nodes per layer

\message{^^J Layer}

\foreachitem \N \in \Nnod{ % loop over layers

\def\lay{\Ncnt} % alias of index of current layer

\pgfmathsetmacro\prev{int(\Ncnt-1)} % number of previous layer

\message{\lay,}

\foreach \i [evaluate={\y=\N/2-\i; \x=\lay; \n=\nstyle;}] in {1,...,\N}{ % loop over nodes

% NODES

\node[node \n] (N\lay-\i) at (\x,\y) {$a_\i^{(\prev)}$};

% CONNECTIONS

\ifnum\lay>1 % connect to previous layer

\foreach \j in {1,...,\Nnod[\prev]}{ % loop over nodes in previous layer

\draw[connect,white,line width=1.2] (N\prev-\j) -- (N\lay-\i);

\draw[connect] (N\prev-\j) -- (N\lay-\i);

%\draw[connect] (N\prev-\j.0) -- (N\lay-\i.180); % connect to left

}

\fi % else: nothing to connect first layer

}

}

% LABELS

\node[above=5,align=center,mygreen!60!black] at (N1-1.90) {input\\[-0.2em]layer};

\node[above=2,align=center,myblue!60!black] at (N3-1.90) {hidden layer};

\node[above=8,align=center,myred!60!black] at (N\Nnodlen-1.90) {output\\[-0.2em]layer};

\end{tikzpicture}

% NEURAL NETWORK with coefficients, shifted

\begin{tikzpicture}[x=2.2cm,y=1.4cm]

\message{^^JNeural network, shifted}

\readlist\Nnod{4,5,5,5,3} % array of number of nodes per layer

\readlist\Nstr{n,m,m,m,k} % array of string number of nodes per layer

\readlist\Cstr{\strut x,a^{(\prev)},a^{(\prev)},a^{(\prev)},y} % array of coefficient symbol per layer

\def\yshift{0.5} % shift last node for dots

\message{^^J Layer}

\foreachitem \N \in \Nnod{ % loop over layers

\def\lay{\Ncnt} % alias of index of current layer

\pgfmathsetmacro\prev{int(\Ncnt-1)} % number of previous layer

\message{\lay,}

\foreach \i [evaluate={\c=int(\i==\N); \y=\N/2-\i-\c*\yshift;

\index=(\i<\N?int(\i):"\Nstr[\lay]");

\x=\lay; \n=\nstyle;}] in {1,...,\N}{ % loop over nodes

% NODES

\node[node \n] (N\lay-\i) at (\x,\y) {$\Cstr[\lay]_{\index}$};

% CONNECTIONS

\ifnum\lay>1 % connect to previous layer

\foreach \j in {1,...,\Nnod[\prev]}{ % loop over nodes in previous layer

\draw[connect,white,line width=1.2] (N\prev-\j) -- (N\lay-\i);

\draw[connect] (N\prev-\j) -- (N\lay-\i);

%\draw[connect] (N\prev-\j.0) -- (N\lay-\i.180); % connect to left

}

\fi % else: nothing to connect first layer

}

\path (N\lay-\N) --++ (0,1+\yshift) node[midway,scale=1.5] {$\vdots$};

}

% LABELS

\node[above=5,align=center,mygreen!60!black] at (N1-1.90) {input\\[-0.2em]layer};

\node[above=2,align=center,myblue!60!black] at (N3-1.90) {hidden layers};

\node[above=10,align=center,myred!60!black] at (N\Nnodlen-1.90) {output\\[-0.2em]layer};

\end{tikzpicture}

% NEURAL NETWORK no text

\begin{tikzpicture}[x=2.2cm,y=1.4cm]

\message{^^JNeural network without text}

\readlist\Nnod{4,5,5,5,3} % array of number of nodes per layer

\message{^^J Layer}

\foreachitem \N \in \Nnod{ % loop over layers

\def\lay{\Ncnt} % alias of index of current layer

\pgfmathsetmacro\prev{int(\Ncnt-1)} % number of previous layer

\message{\lay,}

\foreach \i [evaluate={\y=\N/2-\i; \x=\lay; \n=\nstyle;}] in {1,...,\N}{ % loop over nodes

% NODES

\node[node \n] (N\lay-\i) at (\x,\y) {};

% CONNECTIONS

\ifnum\lay>1 % connect to previous layer

\foreach \j in {1,...,\Nnod[\prev]}{ % loop over nodes in previous layer

\draw[connect,white,line width=1.2] (N\prev-\j) -- (N\lay-\i);

\draw[connect] (N\prev-\j) -- (N\lay-\i);

%\draw[connect] (N\prev-\j.0) -- (N\lay-\i.180); % connect to left

}

\fi % else: nothing to connect first layer

}

}

% LABELS

\node[above=5,align=center,mygreen!60!black] at (N1-1.90) {input\\[-0.2em]layer};

\node[above=2,align=center,myblue!60!black] at (N3-1.90) {hidden layer};

\node[above=10,align=center,myred!60!black] at (N\Nnodlen-1.90) {output\\[-0.2em]layer};

\end{tikzpicture}

% NEURAL NETWORK no text - large

\begin{tikzpicture}[x=2.3cm,y=1.0cm]

\message{^^JNeural network large}

\readlist\Nnod{6,7,7,7,7,7,4} % array of number of nodes per layer

\message{^^J Layer}

\foreachitem \N \in \Nnod{ % loop over layers

\def\lay{\Ncnt} % alias of index of current layer

\pgfmathsetmacro\prev{int(\Ncnt-1)} % number of previous layer

\message{\lay,}

\foreach \i [evaluate={\y=\N/2-\i; \x=\lay; \n=\nstyle;

\nprev=int(\prev<\Nnodlen?min(2,\prev):3);}] in {1,...,\N}{ % loop over nodes

% NODES

%\node[node \n,outer sep=0.6,minimum size=18] (N\lay-\i) at (\x,\y) {};

\coordinate (N\lay-\i) at (\x,\y);

% CONNECTIONS

\ifnum\lay>1 % connect to previous layer

\foreach \j in {1,...,\Nnod[\prev]}{ % loop over nodes in previous layer

\draw[connect,white,line width=1.2] (N\prev-\j) -- (N\lay-\i);

\draw[connect] (N\prev-\j) -- (N\lay-\i);

%\draw[connect] (N\prev-\j.0) -- (N\lay-\i.180); % connect to left

\node[node \nprev,minimum size=18] at (N\prev-\j) {}; % draw node over lines

}

\ifnum \lay=\Nnodlen % draw last node over lines

\node[node \n,minimum size=18] at (N\lay-\i) {};

\fi

\fi % else: nothing to connect first layer

}

}

\end{tikzpicture}

% DEEP CONVOLUTIONAL NEURAL NETWORK

\begin{tikzpicture}[x=1.6cm,y=1.1cm]

\large

\message{^^JDeep convolution neural network}

\readlist\Nnod{5,5,4,3,2,4,4,3} % array of number of nodes per layer

\def\NC{6} % number of convolutional layers

\def\nstyle{int(\lay<\Nnodlen?(\lay<\NC?min(2,\lay):3):4)} % map layer number onto 1, 2, 3, or 4

\tikzset{ % node styles, numbered for easy mapping with \nstyle

node 1/.style={node in},

node 2/.style={node convol},

node 3/.style={node hidden},

node 4/.style={node out},

}

% TRAPEZIA

\draw[myorange!40,fill=myorange,fill opacity=0.02,rounded corners=2]

%(1.6,-2.5) rectangle (4.4,2.5);

(1.6,-2.7) --++ (0,5.4) --++ (3.8,-1.9) --++ (0,-1.6) -- cycle;

\draw[myblue!40,fill=myblue,fill opacity=0.02,rounded corners=2]

(5.6,-2.0) rectangle++ (1.8,4.0);

\node[right=19,above=3,align=center,myorange!60!black] at (3.1,1.8) {convolutional\\[-0.2em]layers};

\node[above=3,align=center,myblue!60!black] at (6.5,1.9) {fully-connected\\[-0.2em]hidden layers};

\message{^^J Layer}

\foreachitem \N \in \Nnod{ % loop over layers

\def\lay{\Ncnt} % alias of index of current layer

\pgfmathsetmacro\prev{int(\Ncnt-1)} % number of previous layer

%\pgfmathsetmacro\Nprev{\Nnod[\prev]} % array of number of nodes in previous layer

\message{\lay,}

\foreach \i [evaluate={\y=\N/2-\i+0.5; \x=\lay; \n=\nstyle;}] in {1,...,\N}{ % loop over nodes

%\message{^^J Layer \lay, node \i}

% NODES

\node[node \n,outer sep=0.6] (N\lay-\i) at (\x,\y) {};

% CONNECTIONS

\ifnum\lay>1 % connect to previous layer

\ifnum\lay<\NC % convolutional layers

\foreach \j [evaluate={\jprev=int(\i-\j); \cconv=int(\Nnod[\prev]>\N); \ctwo=(\cconv&&\j>0);

\c=int((\jprev<1||\jprev>\Nnod[\prev]||\ctwo)?0:1);}]

in {-1,0,1}{

\ifnum\c=1

\ifnum\cconv=0

\draw[connect,white,line width=1.2] (N\prev-\jprev) -- (N\lay-\i);

\fi

\draw[connect] (N\prev-\jprev) -- (N\lay-\i);

\fi

}

\else % fully connected layers

\foreach \j in {1,...,\Nnod[\prev]}{ % loop over nodes in previous layer

\draw[connect,white,line width=1.2] (N\prev-\j) -- (N\lay-\i);

\draw[connect] (N\prev-\j) -- (N\lay-\i);

}

\fi

\fi % else: nothing to connect first layer

}

}

% LABELS

\node[above=3,align=center,mygreen!60!black] at (N1-1.90) {input\\[-0.2em]layer};

\node[above=3,align=center,myred!60!black] at (N\Nnodlen-1.90) {output\\[-0.2em]layer};

\end{tikzpicture}

% AUTOENCODER

\begin{tikzpicture}[x=2.1cm,y=1.2cm]

\large

\message{^^JAutoencoder}

\readlist\Nnod{6,5,4,3,4,5,6} % array of number of nodes per layer

% TRAPEZIA

\node[above,align=center,myorange!60!black] at (3,2.4) {encoder};

\node[above,align=center,myblue!60!black] at (5,2.4) {decoder};

\draw[myorange!40,fill=myorange,fill opacity=0.02,rounded corners=2]

(1.6,-2.7) --++ (0,5.4) --++ (2.8,-1.2) --++ (0,-3) -- cycle;

\draw[myblue!40,fill=myblue,fill opacity=0.02,rounded corners=2]

(6.4,-2.7) --++ (0,5.4) --++ (-2.8,-1.2) --++ (0,-3) -- cycle;

\message{^^J Layer}

\foreachitem \N \in \Nnod{ % loop over layers

\def\lay{\Ncnt} % alias of index of current layer

\pgfmathsetmacro\prev{int(\Ncnt-1)} % number of previous layer

\message{\lay,}

\foreach \i [evaluate={\y=\N/2-\i+0.5; \x=\lay; \n=\nstyle;}] in {1,...,\N}{ % loop over nodes

% NODES

\node[node \n,outer sep=0.6] (N\lay-\i) at (\x,\y) {};

% CONNECTIONS

\ifnum\lay>1 % connect to previous layer

\foreach \j in {1,...,\Nnod[\prev]}{ % loop over nodes in previous layer

\draw[connect,white,line width=1.2] (N\prev-\j) -- (N\lay-\i);

\draw[connect] (N\prev-\j) -- (N\lay-\i);

%\draw[connect] (N\prev-\j.0) -- (N\lay-\i.180); % connect to left

}

\fi % else: nothing to connect first layer

}

}

% LABELS

\node[above=2,align=center,mygreen!60!black] at (N1-1.90) {input};

\node[above=2,align=center,myred!60!black] at (N\Nnodlen-1.90) {output};

\end{tikzpicture}

% NEURAL NETWORK activation

% https://www.youtube.com/watch?v=aircAruvnKk&list=PLZHQObOWTQDNU6R1_67000Dx_ZCJB-3pi&index=1

\begin{tikzpicture}[x=2.7cm,y=1.6cm]

\message{^^JNeural network activation}

\def\NI{5} % number of nodes in input layers

\def\NO{4} % number of nodes in output layers

\def\yshift{0.4} % shift last node for dots

% INPUT LAYER

\foreach \i [evaluate={\c=int(\i==\NI); \y=\NI/2-\i-\c*\yshift; \index=(\i<\NI?int(\i):"n");}]

in {1,...,\NI}{ % loop over nodes

\node[node in,outer sep=0.6] (NI-\i) at (0,\y) {$a_{\index}^{(0)}$};

}

% OUTPUT LAYER

\foreach \i [evaluate={\c=int(\i==\NO); \y=\NO/2-\i-\c*\yshift; \index=(\i<\NO?int(\i):"m");}]

in {\NO,...,1}{ % loop over nodes

\ifnum\i=1 % high-lighted node

\node[node hidden]

(NO-\i) at (1,\y) {$a_{\index}^{(1)}$};

\foreach \j [evaluate={\index=(\j<\NI?int(\j):"n");}] in {1,...,\NI}{ % loop over nodes in previous layer

\draw[connect,white,line width=1.2] (NI-\j) -- (NO-\i);

\draw[connect] (NI-\j) -- (NO-\i)

node[pos=0.50] {\contour{white}{$w_{1,\index}$}};

}

\else % other light-colored nodes

\node[node,blue!20!black!80,draw=myblue!20,fill=myblue!5]

(NO-\i) at (1,\y) {$a_{\index}^{(1)}$};

\foreach \j in {1,...,\NI}{ % loop over nodes in previous layer

%\draw[connect,white,line width=1.2] (NI-\j) -- (NO-\i);

\draw[connect,myblue!20] (NI-\j) -- (NO-\i);

}

\fi

}

% DOTS

\path (NI-\NI) --++ (0,1+\yshift) node[midway,scale=1.2] {$\vdots$};

\path (NO-\NO) --++ (0,1+\yshift) node[midway,scale=1.2] {$\vdots$};

% EQUATIONS

\def\agr#1{{\color{mydarkgreen}a_{#1}^{(0)}}} % green a_i^j

\node[below=16,right=11,mydarkblue,scale=0.95] at (NO-1)

{$\begin{aligned} %\underset{\text{bias}}{b_1}

&= \color{mydarkred}\sigma\left( \color{black}

w_{1,1}\agr{1} + w_{1,2}\agr{2} + \ldots + w_{1,n}\agr{n} + b_1^{(0)}

\color{mydarkred}\right)\\

&= \color{mydarkred}\sigma\left( \color{black}

\sum_{i=1}^{n} w_{1,i}\agr{i} + b_1^{(0)}

\color{mydarkred}\right)

\end{aligned}$};

\node[right,scale=0.9] at (1.3,-1.3)

{$\begin{aligned}

{\color{mydarkblue}

\begin{pmatrix}

a_{1}^{(1)} \\[0.3em]

a_{2}^{(1)} \\

\vdots \\

a_{m}^{(1)}

\end{pmatrix}}

&=

\color{mydarkred}\sigma\left[ \color{black}

\begin{pmatrix}

w_{1,1} & w_{1,2} & \ldots & w_{1,n} \\

w_{2,1} & w_{2,2} & \ldots & w_{2,n} \\

\vdots & \vdots & \ddots & \vdots \\

w_{m,1} & w_{m,2} & \ldots & w_{m,n}

\end{pmatrix}

{\color{mydarkgreen}

\begin{pmatrix}

a_{1}^{(0)} \\[0.3em]

a_{2}^{(0)} \\

\vdots \\

a_{n}^{(0)}

\end{pmatrix}}

+

\begin{pmatrix}

b_{1}^{(0)} \\[0.3em]

b_{2}^{(0)} \\

\vdots \\

b_{m}^{(0)}

\end{pmatrix}

\color{mydarkred}\right]\\[0.5em]

{\color{mydarkblue}\mathbf{a}^{(1)}} % vector (bold)

&= \color{mydarkred}\sigma\left( \color{black}

\mathbf{W}^{(0)} {\color{mydarkgreen}\mathbf{a}^{(0)}}+\mathbf{b}^{(0)}

\color{mydarkred}\right)

\end{aligned}$};

\end{tikzpicture}

\end{document}

Hi! This is great, I am using a variation of the basic graph for a presentation! (Shall I cite you? How?)

I have a question. What if I want to add additional nodes and “connecting arrows” (corresponding to external variables) somewhere in the model? Specifically: I have

\readlist\Nnod{4,5,3,1,2}

and I want two extra nodes, one above and one below the only node in the fourth layer (the last hidden weight). I can draw the nodes and set their style but I cannot place them in an exact symmetrical position nor can I connect them with arrows to (N4-1.90), because I do not know how exactly to determine their position.

Hi Paolo,

Thanks for the question. You may cite me or this web page according to the Creative Commons Attribution-ShareAlike 4.0 International License. If you adapt it with only small changes you could write something like “Adapted from …”.

I am not completely sure I understand what you want. How do you want to connect the extra nodes? Only to the output layer, like this?

\begin{tikzpicture}[x=2.2cm,y=1.4cm,
    node ext/.style={node,purple!20!black,draw=purple!30!black,fill=purple!20}
  ]
  \message{^^JNeural network without arrows}
  \readlist\Nnod{4,5,3,1,2} % array of number of nodes per layer
  \message{^^J Layer}
  \foreachitem \N \in \Nnod{ % loop over layers
    \def\lay{\Ncnt} % alias of index of current layer
    \pgfmathsetmacro\prev{int(\Ncnt-1)} % number of previous layer
    \message{\lay,}
    \foreach \i [evaluate={\y=\N/2-\i; \x=\lay; \n=\nstyle;}] in {1,...,\N}{ % loop over nodes
      % NODES
      \node[node \n] (N\lay-\i) at (\x,\y) {$a_\i^{(\prev)}$};
      % CONNECTIONS
      \ifnum\lay>1 % connect to previous layer
        \foreach \j in {1,...,\Nnod[\prev]}{ % loop over nodes in previous layer
          \draw[connect,white,line width=1.2] (N\prev-\j) -- (N\lay-\i);
          \draw[connect] (N\prev-\j) -- (N\lay-\i);
          %\draw[connect] (N\prev-\j.0) -- (N\lay-\i.180); % connect to left
        }
      \fi % else: nothing to connect first layer
    }
  }
  % EXTRA NODES IN LAYER 4
  \node[node ext] (E1) at (4,0.5) {$c_1$};
  \node[node ext] (E2) at (4,-1.5) {$c_2$};
  %\draw[connect] (E1) -- (N4-1); % connect to layer 4
  %\draw[connect] (E2) -- (N4-1); % connect to layer 4
  \draw[connect] (E1) -- (N5-1); % connect to layer 5
  \draw[connect] (E1) -- (N5-2); % connect to layer 5
  \draw[connect] (E2) -- (N5-1); % connect to layer 5
  \draw[connect] (E2) -- (N5-2); % connect to layer 5
\end{tikzpicture}

Cheers,

Izaak

Yes, that was it! My code actually adds this bit (keep in mind that the second-to-last layer only has one node):

% EXTERNAL VARIABLES

\node[above=30,external] (S1) at (N4-1) {$S_{i}^{(1)}$};

\node[below=30,external] (S2) at (N4-1) {$S_{i}^{(2)}$};

\foreach \k in {1,...,\Nnod[\Nnodlen]}{

\draw[connect arrow] (S1) -- (N\Nnodlen-\k);

\draw[connect arrow] (S2) -- (N\Nnodlen-\k);

}

This works for me, even though I am not happy with “above=30” and “below=30”. Sorry if I can’t embed this in LaTeX code that can be run on the spot. Anyway, thanks again, also for the advice on citing you.
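One possible way to avoid the hard-coded above=30 and below=30 offsets is to place the extra nodes relative to (N4-1) in the picture's own coordinate units with the calc library. The sketch below is only an illustration under assumptions: the `external` style and the stand-in node (N4-1) are defined here just to make the example compile on its own, and the offset of one y unit is an arbitrary choice.

```latex
\documentclass[border=3pt,tikz]{standalone}
\usetikzlibrary{calc} % for ($(A)+(x,y)$) coordinate arithmetic
\begin{document}
\begin{tikzpicture}[x=2.2cm,y=1.4cm,
    % hypothetical style, standing in for the commenter's own "external" style
    external/.style={thick,circle,draw=purple!30!black,fill=purple!20,minimum size=22}
  ]
  % stand-in for the single node of layer 4 (y = 1/2 - 1 = -0.5 in the post's layout)
  \node[thick,circle,draw,minimum size=22] (N4-1) at (4,-0.5) {};
  % one y unit above and below: symmetric by construction
  \node[external] (S1) at ($(N4-1)+(0, 1)$) {$S^{(1)}$};
  \node[external] (S2) at ($(N4-1)+(0,-1)$) {$S^{(2)}$};
\end{tikzpicture}
\end{document}
```

Because the offsets are expressed in the same x and y units as the rest of the network, the extra nodes stay aligned with the grid if the scale of the tikzpicture changes.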

Great post. So helpful! Thanks!

Thank you! Glad it’s helpful!

This is a great post, but I have one question: I need the code for these two figures (“Activation function in one neuron and one layer in matrix notation” and “Distributing the arrows uniformly around the nodes”), so that I can modify some of the lines and make what I need for my own research.

Hi Zen, thank you! All TikZ code published on this website is available under the Attribution-ShareAlike 4.0 International license. Feel free to share, copy, adapt, etc. If you do not make large modifications to the original, some form of attribution is appreciated: “Adapted from [reference].”, “By courtesy of Izaak Neutelings.”, or something similar.

Izaak

Thank you for sharing your work. Can I use this source code?

Hi Chi, sure! All TikZ code published on this website is available under the Attribution-ShareAlike 4.0 International license. No need to ask for explicit permission.

Izaak

Hey, thanks for the awesome post! Is it possible to change the thickness of the node circle ends? And the distance between node layers? Is it a matter of changing the for loop?

Hi Bhargav,

To change the distance between layers, simply modify the x coordinate scale via [x=4cm,y=1.4cm] at the beginning of the tikzpicture environment.

What do you mean by “node circle ends”? Do you mean just the nodes in the final output layer? To change the style of a node, such as its line thickness, just use the basics explained in this section of the TikZ manual.

For example, changing x=2.2cm to x=4cm, and changing thick to ultra thick in the definition of the mynode style for the first code snippet in this post:

\documentclass[border=3pt,tikz]{standalone}
\usepackage{tikz}
\usepackage{listofitems} % for \readlist to create arrays
\tikzstyle{mynode}=[ultra thick,draw=blue,fill=blue!20,circle,minimum size=22]
\begin{document}
\begin{tikzpicture}[x=4cm,y=1.4cm]
  \readlist\Nnod{4,5,5,5,3} % number of nodes per layer
  % \Nnodlen = length of \Nnod (i.e. total number of layers)
  % \Nnod[1] = element (number of nodes) at index 1
  \foreachitem \N \in \Nnod{ % loop over layers
    % \N = current element in this iteration (i.e. number of nodes for this layer)
    % \Ncnt = index of current layer in this iteration
    \foreach \i [evaluate={\x=\Ncnt; \y=\N/2-\i+0.5; \prev=int(\Ncnt-1);}] in {1,...,\N}{ % loop over nodes
      \node[mynode] (N\Ncnt-\i) at (\x,\y) {};
      \ifnum\Ncnt>1 % connect to previous layer
        \foreach \j in {1,...,\Nnod[\prev]}{ % loop over nodes in previous layer
          \draw[thick] (N\prev-\j) -- (N\Ncnt-\i); % connect arrows directly
        }
      \fi % else: nothing to connect first layer
    }
  }
\end{tikzpicture}
\end{document}

If you only want to change the style of the output layer nodes in the full code, simply specify your desired line thickness in the definition of the node out style (overriding the line thickness defined for the node style):

\tikzstyle{node}=[thick,circle,draw=myblue,minimum size=22,inner sep=0.5,outer sep=0.6]
\tikzstyle{node in}=[node,green!20!black,draw=mygreen!30!black,fill=mygreen!25]
\tikzstyle{node hidden}=[node,blue!20!black,draw=myblue!30!black,fill=myblue!20]
\tikzstyle{node convol}=[node,orange!20!black,draw=myorange!30!black,fill=myorange!20]
\tikzstyle{node out}=[node,ultra thick,red!20!black,draw=myred!30!black,fill=myred!20] % added "ultra thick"

Cheers,

Izaak

Hi Izaak,

Impressed with your work! I’m looking to adapt your code for a Hemisphere Neural Network to a 5-hemisphere structure, each with 2 regressors and 3 hidden layers of 4 neurons (similar to Figure 1 in [https://arxiv.org/pdf/2202.04146.pdf]). Any tips or suggestions?

Thanks in advance!

Hi Firmino,

Thanks!

You could do it with either a couple of scopes (i.e., the scope environment) to vertically shift the networks, or you can define a more general macro for the networks that can be vertically shifted, using either \def or \pic.

Below is an example with \pic that may also be useful to others.

% Author: Izaak Neutelings (November 2023)
% Inspiration:
%   https://tikz.net/neural_networks/#comment-3299
%   https://arxiv.org/pdf/2202.04146.pdf
\documentclass[border=3pt,tikz]{standalone}
\usetikzlibrary{calc}
\usepackage{xcolor}
% LAYERS
\pgfdeclarelayer{back} % to draw on background
\pgfsetlayers{back,main} % set order
% COLORS
\colorlet{mylightred}{red!80!black!60}
\colorlet{mylightblue}{blue!80!black!60}
\colorlet{mylightgreen}{green!80!black!60}
\colorlet{mylightorange}{orange!95!black!60}
% STYLES
\tikzset{ % node styles, numbered for easy mapping with \nstyle
  >=latex, % for default LaTeX arrow head
  node/.style={thin,circle,draw=#1!70!black,fill=#1,
    minimum size=\pgfkeysvalueof{/tikz/node size},inner sep=0.5,outer sep=0},
  node/.default=mylightblue, % default color for node style
  connect/.style={thick,blue!20!black!35}, %,line cap=round
}
% MACROS
\def\lastlay{1} % index of last layer
\def\lastN{1} % number of nodes in last layer
\tikzset{
  pics/network/.style={%
    code={%
      \foreach \N [count=\lay,remember={\N as \Nprev (initially 0);}]
          in {#1}{ % loop over layers
        \xdef\lastlay{\lay} % store for after loop
        \xdef\lastN{\N} % store for after loop
        \foreach \i [evaluate={%
            \y=\pgfkeysvalueof{/tikz/node dist}*(\N/2-\i+0.5);
            \x=\pgfkeysvalueof{/tikz/layer dist}*(\lay-1);
            \prev=int(\lay-1);
          }%
        ] in {1,...,\N}{ % loop over nodes
          \node[node=\pgfkeysvalueof{/tikz/node color}] (-\lay-\i) at (\x,\y) {};
          \ifnum\Nprev>0 % connect to previous layer
            \foreach \j in {1,...,\Nprev}{ % loop over nodes in previous layer
              \begin{pgfonlayer}{back} % draw on back
                \draw[connect] (-\prev-\j) -- (-\lay-\i);
              \end{pgfonlayer}
            }
          \fi
        } % close \foreach node \i in layer
      } % close \foreach layer \N
      \coordinate (-west) at (-\pgfkeysvalueof{/tikz/node size}/2,0); % name first layer
      \foreach \i in {1,...,\lastN}{ % name nodes in last layer
        \node[node,draw=none,fill=none] (-last-\i) at (-\lastlay-\i) {};
      }
    } % close code
  }, % close pics
  layer dist/.initial=1.5, % horizontal distance between layers
  node dist/.initial=1.0, % vertical distance between nodes in same layers
  node color/.initial=mylightblue, % default color of nodes
  node size/.initial=15pt, % size of nodes
}
\begin{document}
% NEURAL NETWORK: hemispheres
\begin{tikzpicture}[x=1cm,y=1cm,node dist=0.8]
  % NETWORKS
  \pic[node color=mylightorange] % top
    (T) at (0,4.2*\pgfkeysvalueof{/tikz/node dist}) {network={2,4,4,4,1}};
  \pic[node color=mylightred] % bottom
    (B) at (0,-4.2*\pgfkeysvalueof{/tikz/node dist}) {network={2,4,4,4,1}};
  \pic[node color=mylightblue] % middle
    (M) at (0,0) {network={2,4,4,4,1}};
  % OUTPUT NODE
  \node[node=mylightgreen] (OUT) at ($(M-last-1)+(3,0)$) {}; %({1.5*(\lastlay+2)},0) {};
  \begin{pgfonlayer}{back} % draw on back
    \draw[connect] % draw connections
      (T-last-1) -- (OUT)
      (M-last-1) -- (OUT)
      (B-last-1) -- (OUT);
  \end{pgfonlayer}
  % TEXT
  \node[left=0pt] at (T-west) {$X_{t,g\in\mathcal{H}_1}$};
  \node[left=0pt] at (M-west) {$X_{t,g\in\mathcal{H}_2}$};
  \node[left=0pt] at (B-west) {$X_{t,g\in\mathcal{H}_3}$};
  \node[anchor=105] at (T-last-1.south) {$h_{t,1}$};
  \node[anchor=105] at (M-last-1.south) {$h_{t,2}$};
  \node[anchor=105] at (B-last-1.south) {$h_{t,3}$};
  \node[right=2pt] at (OUT.east) {$\displaystyle\hat{y} = \sum^3_{i=1}h_{t,i}$}; % sum over the three outputs h_{t,1}, h_{t,2}, h_{t,3}
\end{tikzpicture}
\end{document}

Cheers,

Izaak

Hi Izaak,

This is awesome! I am using the “Activation function in one neuron and one layer in matrix notation” image for the cover page of my lecture notes for a class called “Quantitative Foundations of Artificial Intelligence”.

Just a minor remark: The formulas displayed contain a slight mistake. If you look carefully, in some places you reference an input a_{0}^{(0)}, which does not exist. Similarly, all the weights w_{i, 0} (referring to the weight applied to a_{0}^{(0)} in the computation of a_{i}^{(1)}) do not exist either. Finally, including these non-existing weights leads to the fact that your matrix W^{(0)} has n+1 columns whereas the column vector a^{(0)} only has n rows (since you’re correctly ignoring the non-existent a_{0}^{(0)} here) in the matrix notation, which clearly doesn’t work. Similarly, the first and second expression for the computation of a_{1}^{(1)} do not match. Hope this helps!
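To make the mismatch concrete, here is a sketch of a dimension-consistent version. It assumes a layer with n inputs and m outputs, an activation function \sigma, and bias terms b_i^{(0)}; the symbols \sigma, m, and b are my assumptions about the figure's notation, not copied from it. With the indices starting at 1, the single-neuron expression and the matrix form agree, and W^{(0)} has exactly n columns:

```latex
% requires \usepackage{amsmath}
\begin{align*}
  a_1^{(1)}
    &= \sigma\!\left( w_{1,1}\,a_1^{(0)} + w_{1,2}\,a_2^{(0)} + \dots + w_{1,n}\,a_n^{(0)} + b_1^{(0)} \right)
     = \sigma\!\left( \sum_{j=1}^{n} w_{1,j}\,a_j^{(0)} + b_1^{(0)} \right), \\[0.5em]
  \begin{pmatrix} a_1^{(1)} \\ a_2^{(1)} \\ \vdots \\ a_m^{(1)} \end{pmatrix}
    &= \sigma\!\left[
       \begin{pmatrix}
         w_{1,1} & w_{1,2} & \cdots & w_{1,n} \\
         w_{2,1} & w_{2,2} & \cdots & w_{2,n} \\
         \vdots  & \vdots  & \ddots & \vdots  \\
         w_{m,1} & w_{m,2} & \cdots & w_{m,n}
       \end{pmatrix}
       \begin{pmatrix} a_1^{(0)} \\ a_2^{(0)} \\ \vdots \\ a_n^{(0)} \end{pmatrix}
       +
       \begin{pmatrix} b_1^{(0)} \\ b_2^{(0)} \\ \vdots \\ b_m^{(0)} \end{pmatrix}
       \right].
\end{align*}
```

Here W^{(0)} is an m-by-n matrix acting on the n-row vector a^{(0)}, so the product is well defined and its first row reproduces the expression for a_1^{(1)}.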

Cheers,

Marvin

Hi Marvin,

Thank you for the corrections! I appreciate it. Unfortunately, it took two years before someone informed me of this typo. 😅

It should be fixed now.

Cheers,

Izaak

Excellent examples! Could you showcase an ellipsis example for vertical nodes? And could there be an easy way to flip the direction (top-to-bottom instead of left-to-right)?

Hi Nurech,

I am not 100% sure what you are asking: Do you mean horizontal ellipses between two (vertical) layers, or vertical ellipses between nodes in the same (vertical) layer as shown in the examples above?

What would you like to flip the direction of? Just the ellipses, or rather the layers?
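In case you mean the layers: below is a minimal sketch, based on the basic listofitems loop from the post, that stacks the layers from top to bottom by swapping the roles of x and y. The style name mynode and the layer sizes are only illustrative, and the ellipses are left out.

```latex
\documentclass[border=3pt,tikz]{standalone}
\usepackage{listofitems} % for \readlist to create arrays
\tikzset{mynode/.style={thick,draw=blue,fill=blue!20,circle,minimum size=22}}
\begin{document}
\begin{tikzpicture}[x=1.4cm,y=2.2cm]
  \readlist\Nnod{4,5,5,5,3} % number of nodes per layer
  \foreachitem \N \in \Nnod{ % loop over layers
    % layers are stacked downward (y = -layer index),
    % nodes within a layer are spread horizontally around x = 0
    \foreach \i [evaluate={\x=\i-\N/2-0.5; \y=-\Ncnt; \prev=int(\Ncnt-1);}] in {1,...,\N}{
      \node[mynode] (N\Ncnt-\i) at (\x,\y) {};
      \ifnum\Ncnt>1 % connect to previous layer
        \foreach \j in {1,...,\Nnod[\prev]}{ % loop over nodes in previous layer
          \draw[thick] (N\prev-\j) -- (N\Ncnt-\i);
        }
      \fi % else: nothing to connect for the first layer
    }
  }
\end{tikzpicture}
\end{document}
```

Using \y=\Ncnt instead of \y=-\Ncnt would stack the layers from bottom to top.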

Cheers,

Izaak

Hi, thanks a lot for your examples, they are of invaluable help. I’ve noticed that if I delete the first example (“% NEURAL NETWORK with coefficients, arrows”), the code crashes. I was not able to figure out what causes this. Do you have any idea?

I get a few errors that say: “Package pgf Error: No shape named `N2-5' is known.”

Thanks for your attention!

Hi C,

It is because the nodes need to be defined before calculating the angles of the arrows. This was not done correctly in the “NEURAL NETWORK with coefficients, uniform arrows” picture.

The following should fix it by splitting the loop to first define all nodes before calculating the uniformly distributed angles and drawing the connecting lines:

\documentclass[border=3pt,tikz]{standalone}
\usepackage{amsmath} % for aligned
\usepackage{listofitems} % for \readlist to create arrays
\usetikzlibrary{arrows.meta} % for arrow size
\usepackage[outline]{contour} % glow around text
\contourlength{1.4pt}

% COLORS
\usepackage{xcolor}
\colorlet{myred}{red!80!black}
\colorlet{myblue}{blue!80!black}
\colorlet{mygreen}{green!60!black}
\colorlet{myorange}{orange!70!red!60!black}
\colorlet{mydarkred}{red!30!black}
\colorlet{mydarkblue}{blue!40!black}
\colorlet{mydarkgreen}{green!30!black}

% STYLES
\tikzset{
  >=latex, % for default LaTeX arrow head
  node/.style={thick,circle,draw=myblue,minimum size=22,inner sep=0.5,outer sep=0.6},
  node in/.style={node,green!20!black,draw=mygreen!30!black,fill=mygreen!25},
  node hidden/.style={node,blue!20!black,draw=myblue!30!black,fill=myblue!20},
  node convol/.style={node,orange!20!black,draw=myorange!30!black,fill=myorange!20},
  node out/.style={node,red!20!black,draw=myred!30!black,fill=myred!20},
  connect/.style={thick,mydarkblue}, %,line cap=round
  connect arrow/.style={-{Latex[length=4,width=3.5]},thick,mydarkblue,shorten <=0.5,shorten >=1},
  node 1/.style={node in}, % node styles, numbered for easy mapping with \nstyle
  node 2/.style={node hidden},
  node 3/.style={node out}
}
\def\nstyle{int(\lay<\Nnodlen?min(2,\lay):3)} % map layer number onto 1, 2, or 3

\begin{document}

% NEURAL NETWORK with coefficients, uniform arrows
\newcommand\setAngles[3]{
  \pgfmathanglebetweenpoints{\pgfpointanchor{#2}{center}}{\pgfpointanchor{#1}{center}}
  \pgfmathsetmacro\angmin{\pgfmathresult}
  \pgfmathanglebetweenpoints{\pgfpointanchor{#2}{center}}{\pgfpointanchor{#3}{center}}
  \pgfmathsetmacro\angmax{\pgfmathresult}
  \pgfmathsetmacro\dang{\angmax-\angmin}
  \pgfmathsetmacro\dang{\dang<0?\dang+360:\dang}
}
\begin{tikzpicture}[x=2.2cm,y=1.4cm]
  \message{^^JNeural network with uniform arrows}
  \readlist\Nnod{4,5,5,5,3} % array of number of nodes per layer

  \foreachitem \N \in \Nnod{ % loop over layers
    \def\lay{\Ncnt} % alias of index of current layer
    \pgfmathsetmacro\prev{int(\Ncnt-1)} % number of previous layer
    \message{^^J Layer \lay, N=\N, prev=\prev ->}

    % NODES
    \foreach \i [evaluate={\y=\N/2-\i; \x=\lay; \n=\nstyle;}] in {1,...,\N}{ % loop over nodes
      \message{N\lay-\i, }
      \node[node \n] (N\lay-\i) at (\x,\y) {$a_\i^{(\prev)}$};
    }

    % CONNECTIONS
    \foreach \i in {1,...,\N}{ % loop over nodes
      \ifnum\lay>1 % connect to previous layer
        \setAngles{N\prev-1}{N\lay-\i}{N\prev-\Nnod[\prev]} % angles in current node
        %\draw[red,thick] (N\lay-\i)++(\angmin:0.2) --++ (\angmin:-0.5) node[right,scale=0.5] {\dang};
        %\draw[blue,thick] (N\lay-\i)++(\angmax:0.2) --++ (\angmax:-0.5) node[right,scale=0.5] {\angmin, \angmax};
        \foreach \j [evaluate={\ang=\angmin+\dang*(\j-1)/(\Nnod[\prev]-1);}] %-180+(\angmax-\angmin)*\j/\Nnod[\prev]
                     in {1,...,\Nnod[\prev]}{ % loop over nodes in previous layer
          \setAngles{N\lay-1}{N\prev-\j}{N\lay-\N} % angles out from previous node
          \pgfmathsetmacro\angout{\angmin+(\dang-360)*(\i-1)/(\N-1)} % number of previous layer
          %\draw[connect arrow,white,line width=1.1] (N\prev-\j.{\angout}) -- (N\lay-\i.{\ang});
          \draw[connect arrow] (N\prev-\j.{\angout}) -- (N\lay-\i.{\ang}); % connect arrows uniformly
        }
      \fi % else: nothing to connect first layer
    }
  }

  % LABELS
  \node[above=5,align=center,mygreen!60!black] at (N1-1.90) {input\\[-0.2em]layer};
  \node[above=2,align=center,myblue!60!black] at (N3-1.90) {hidden layers};
  \node[above=8,align=center,myred!60!black] at (N\Nnodlen-1.90) {output\\[-0.2em]layer};

\end{tikzpicture}
\end{document}

Thank you for reporting this issue.

Hello Sir,

First off, thank you for these great examples. I’ve used your code for “Inserting ellipses between the last two rows”, but I have an issue I am struggling to resolve. My output layer is a single neuron, i.e. my final layer is simply \hat{y}. The three vertical dots remain, and if I try to remove them, they seem to be removed from the input and hidden layers as well. How can I resolve this? Thank you so much.

Hi Luciano,

Thank you for the kind words.

It is not so hard to achieve this. You need to insert an extra condition so that the last node in a layer is only shifted if the layer is not the last layer. This condition is \lay<\Nnodlen, with layer index \lay and total number of layers \Nnodlen, i.e. the length of the list/array \Nnod.

Note that \Nnodlen is automatically created by \readlist\Nnod{...}, and that \lay is my alias for \Ncnt, which is the index created by the loop over this array via \foreachitem \N \in \Nnod {...}.

\documentclass[border=3pt,tikz]{standalone}
\usepackage{amsmath} % for aligned
\usepackage{listofitems} % for \readlist to create arrays
\usetikzlibrary{arrows.meta} % for arrow size
\usepackage[outline]{contour} % glow around text
\contourlength{1.4pt}

% COLORS
\usepackage{xcolor}
\colorlet{myred}{red!80!black}
\colorlet{myblue}{blue!80!black}
\colorlet{mygreen}{green!60!black}
\colorlet{mydarkblue}{blue!40!black}

% STYLES
\tikzset{
  >=latex, % for default LaTeX arrow head
  node/.style={thick,circle,draw=myblue,minimum size=22,inner sep=0.5,outer sep=0.6},
  node in/.style={node,green!20!black,draw=mygreen!30!black,fill=mygreen!25},
  node hidden/.style={node,blue!20!black,draw=myblue!30!black,fill=myblue!20},
  node out/.style={node,red!20!black,draw=myred!30!black,fill=myred!20},
  connect/.style={thick,mydarkblue}, %,line cap=round
  connect arrow/.style={-{Latex[length=4,width=3.5]},thick,mydarkblue,shorten <=0.5,shorten >=1},
  node 1/.style={node in}, % node styles, numbered for easy mapping with \nstyle
  node 2/.style={node hidden},
  node 3/.style={node out}
}
\def\nstyle{int(\lay<\Nnodlen?min(2,\lay):3)} % map layer number onto 1, 2, or 3

\begin{document}

% NEURAL NETWORK with coefficients, shifted
\begin{tikzpicture}[x=2.2cm,y=1.4cm]
  \message{^^JNeural network, shifted}
  \readlist\Nnod{4,5,5,4,1} % array of number of nodes per layer
  \readlist\Nstr{n,m,m,m,k} % array of string number of nodes per layer
  \readlist\Cstr{\strut x,a^{(\prev)},a^{(\prev)},a^{(\prev)},y} % array of coefficient symbol per layer
  \def\yshift{0.5} % shift last node for dots

  \message{^^J Layer}
  \foreachitem \N \in \Nnod{ % loop over layers
    \def\lay{\Ncnt} % alias of index of current layer
    \pgfmathsetmacro\prev{int(\Ncnt-1)} % number of previous layer
    \message{\lay,}
    \foreach \i [evaluate={%
        \isLastNode=int(\i==\N); % last node in this layer
        \isLastLayer=int(\lay==\Nnodlen); % last layer overall
        \y=\N/2-\i-(\isLastLayer?0.5:\isLastNode)*\yshift;
        %\y=\N/2-\i-(\i==\N && \lay<\Nnodlen)*\yshift; % add shift for last node, except for last layer
        \index=(\i<\N? \i:"\Nstr[\lay]");
        \x=\lay; \n=\nstyle;%
      }] in {1,...,\N}{ % loop over nodes

      % NODES
      \node[node \n] (N\lay-\i) at (\x,\y) {$\Cstr[\lay]_{\index}$};

      % CONNECTIONS
      \ifnum\lay>1 % connect to previous layer
        \foreach \j in {1,...,\Nnod[\prev]}{ % loop over nodes in previous layer
          \draw[connect,white,line width=1.2] (N\prev-\j) -- (N\lay-\i);
          \draw[connect] (N\prev-\j) -- (N\lay-\i);
        }
      \fi % else: nothing to connect first layer

    }
    \ifnum\lay<\Nnodlen % add ellipses between two last nodes (except for last layer)
      \path (N\lay-\N) --++ (0,1+\yshift) node[midway,scale=1.5] {$\vdots$};
    \fi
  }

  % LABELS
  \node[above=5,align=center,mygreen!60!black] at (N1-1.90) {input\\[-0.2em]layer};
  \node[above=2,align=center,myblue!60!black] at (N3-1.90) {hidden layers};
  \node[above=10,align=center,myred!60!black] at (N\Nnodlen-1.90) {output\\[-0.2em]layer};

\end{tikzpicture}
\end{document}

Normally, a shift is added only to the last node in a layer. To make the last layer a bit more vertically symmetric, you could also add a "constant" half-shift to all nodes in the last layer, as the code above does, by using

\y=\N/2-\i-(\isLastLayer?0.5:\isLastNode)*\yshift;

Hope that helps & best of luck,

Izaak

Thank you so much for the fast and very informative answer.

Enjoy your weekend Izaak!